Available with a Data Reviewer license.

The Sampling check included with Data Reviewer creates a sample set of features that are randomly selected from one or more layers. Several methods are available for calculating the size of the sample and for determining which feature layers are included in it.

The sample is calculated based on one of the following methods:

- A fixed number of features

- A percentage of all the features in the specified extent

- A number derived from a calculation based on the confidence level, margin of error, and acceptance level

- A polygon grid that is loaded in the map or from a geodatabase

All of the calculation methods use the layer weights to determine how many features from each layer are included in the sample.

A fixed number of features

When you specify a fixed number of features for the sample, the weights assigned to each layer determine how many of those features are drawn from each layer.
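The text does not spell out how the weights split a fixed count. A minimal sketch, assuming the fixed-count method uses the same weighted count as the percentage method described below (WF = L * ((5 - W) + 1)) and divides the requested number of features in proportion to WF. The function name `allocate_fixed_count` and the (name, L, W) tuple layout are hypothetical.

```python
from fractions import Fraction


def allocate_fixed_count(layers, sample_size):
    """Split a fixed sample size across layers in proportion to WF.

    layers: list of (layer_name, L, W) tuples.
    Assumption: WF = L * ((5 - W) + 1), borrowed from the percentage method.
    Returns exact (possibly fractional) shares keyed by layer name.
    """
    wf = {name: count * ((5 - weight) + 1) for name, count, weight in layers}
    wf_total = sum(wf.values())
    # Each layer's share of the fixed count is sample_size * WF / sum(WF).
    return {name: Fraction(sample_size * value, wf_total) for name, value in wf.items()}


layers = [
    ("RoadL", 300, 1),
    ("WatrcrsL", 300, 2),
    ("ContourL", 300, 3),
    ("PolbndL", 300, 4),
    ("TreesA", 300, 5),
]
print(allocate_fixed_count(layers, 300))
```

With the Example 1 layers and a fixed count of 300, this gives the same 100/80/60/40/20 split as a 20 percent sample of 1,500 features. For other counts the shares can be fractional, and the sketch leaves the rounding rule open because the text does not specify one. It also does not cap a layer at the number of features it actually contains.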

A percentage of all features in the extent or database

With the Sampling check, you can generate a sample based on a percentage of all the features in the map. That percentage of all the features in the grid or extent is selected for the sample, and the weights determine how many of those features come from each layer.

Below are two examples that calculate the sample for a percentage of the features in the dataset. The first uses the same number of features in each feature layer; the second uses a different number of features in each layer.

The variables used in the examples are as follows:

| Variable | Description | Value for example 1 | Value for example 2 |
|---|---|---|---|
| F | Number of features to sample | Varies for each layer | Varies for each layer |
| L | Number of features in each layer | Varies for each layer | Varies for each layer |
| W | Weight assigned to each layer | Varies for each layer | Varies for each layer |
| WF | Weighted number of features | Varies for each layer | Varies for each layer |
| N | Normalization factor | 3 | 2 |
| S | Sampling percentage divided by 100 | 0.2 | 0.3 |
| T | Total number of features | 1500 | 500 |

Example 1: Sampling layers with a consistent number of features

This example finds the number of features to sample from each layer in the group layer shown below, given a sampling percentage of 20. The table lists the number of features (L) and the weight (W) for each layer.

| Layer name | L | W | WF | F = S * WF/N |
|---|---|---|---|---|
| RoadL | 300 | 1 | 1500 | 100 |
| WatrcrsL | 300 | 2 | 1200 | 80 |
| ContourL | 300 | 3 | 900 | 60 |
| PolbndL | 300 | 4 | 600 | 40 |
| TreesA | 300 | 5 | 300 | 20 |
| Total | 1500 | | 4500 | 300 |

The calculation method for the sample is as follows:

- For each layer, calculate WF using L * ((5 - W) + 1). For example, for RoadL, WF is 300 * ((5 - 1) + 1) = 1500.

- Calculate T using ∑L, which is 1500.

- Calculate N using ∑WF/∑L, which is 4500/1500 = 3.

- For each layer, calculate F using S * WF/N. For example, for RoadL, F is 0.20 * 1500/3 = 100.

- Verify that ∑F = S * T. Here, 300 = 0.20 * 1500. Before any rounding, this check always holds, because ∑F = S * ∑WF/N = S * ∑L = S * T.
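The steps above translate directly into a few lines of code. A minimal sketch that reproduces Example 1; the function name `percentage_sample` and the (name, L, W) tuple layout are illustrative assumptions. It returns unrounded F values, because the text does not say how the tool rounds fractional results.

```python
def percentage_sample(layers, sampling_percentage):
    """Compute F for each layer with the percentage method.

    layers: list of (layer_name, L, W) tuples.
    Returns unrounded F values keyed by layer name.
    """
    s = sampling_percentage / 100                                  # S
    wf = {name: count * ((5 - weight) + 1) for name, count, weight in layers}  # WF
    t = sum(count for _, count, _ in layers)                       # T = sum of L
    n = sum(wf.values()) / t                                       # N = sum(WF) / sum(L)
    return {name: s * value / n for name, value in wf.items()}     # F = S * WF / N


layers = [
    ("RoadL", 300, 1),
    ("WatrcrsL", 300, 2),
    ("ContourL", 300, 3),
    ("PolbndL", 300, 4),
    ("TreesA", 300, 5),
]
f = percentage_sample(layers, 20)
print(f)
```

Passing the Example 2 layers with a percentage of 30 reproduces that example as well, apart from the rounding of the two 22.5 values.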

Example 2: Sampling layers with a variable number of features

This example finds the number of features to sample from each layer in the group layer shown below, given a sampling percentage of 30. The table lists the number of features (L) and the weight (W) for each layer.

| Layer name | L | W | WF | F = S * WF/N |
|---|---|---|---|---|
| RoadL | 100 | 3 | 300 | 45 |
| WatrcrsL | 200 | 4 | 400 | 60 |
| PolbndL | 50 | 3 | 150 | 22 |
| TreesA | 150 | 5 | 150 | 23 |
| Total | 500 | | 1000 | 150 |

The calculation method for the sample is as follows:

- For each layer, calculate WF using L * ((5 - W) + 1). For example, for RoadL, WF is 100 * ((5 - 3) + 1) = 300.

- Calculate T using ∑L, which is 500.

- Calculate N using ∑WF/∑L, which is 1000/500 = 2.

- For each layer, calculate F using S * WF/N. For example, for RoadL, F is 0.30 * 300/2 = 45. PolbndL and TreesA each come to 0.30 * 150/2 = 22.5; the table shows them as 22 and 23 so that the total is a whole number of features equal to S * T.

- Verify that ∑F = S * T. Here, 150 = 0.30 * 500.
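Because two layers in Example 2 come to 22.5 features, some rounding rule is needed to produce whole features that still add up to S * T. The text does not describe the rule the tool uses. A minimal sketch, assuming a largest-remainder rule: round every share down, then give the leftover features to the layers with the largest fractional parts. `fractions.Fraction` keeps the arithmetic exact so that values such as 45 are not floored to 44 by floating-point error. The function name is hypothetical.

```python
import math
from fractions import Fraction


def percentage_sample_whole(layers, sampling_percentage):
    """Whole-number F per layer whose total equals S * T.

    layers: list of (layer_name, L, W) tuples. The rounding step is an
    assumed largest-remainder rule, not documented Data Reviewer behavior.
    """
    s = Fraction(sampling_percentage) / 100                        # S, exact
    wf = {name: count * ((5 - weight) + 1) for name, count, weight in layers}
    t = sum(count for _, count, _ in layers)                       # T
    n = Fraction(sum(wf.values()), t)                              # N
    exact = {name: s * value / n for name, value in wf.items()}    # F before rounding

    target = round(s * t)                                          # S * T as a whole number
    counts = {name: math.floor(share) for name, share in exact.items()}
    leftover = target - sum(counts.values())
    # Leftover features go to the largest fractional parts; sorted() is
    # stable, so tied layers keep their input order.
    ranked = sorted(exact, key=lambda name: exact[name] - counts[name], reverse=True)
    for name in ranked[:leftover]:
        counts[name] += 1
    return counts


layers = [
    ("RoadL", 100, 3),
    ("WatrcrsL", 200, 4),
    ("PolbndL", 50, 3),
    ("TreesA", 150, 5),
]
counts = percentage_sample_whole(layers, 30)
print(counts)
```

With these layers the sketch returns 45, 60, 23, and 22. The tie between PolbndL and TreesA goes to PolbndL because it is listed first, whereas the table gives the extra feature to TreesA; either choice keeps the total at 150.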

A calculation based on the confidence level, margin of error, and acceptance level

The automatic calculation method for determining the sample size is intended for organizations that want to use the Sampling check to answer the following questions:

- Given a population size, what sample size do I need for the sample to be statistically significant at a given confidence level, within an acceptable margin of error?

- Given my sample size, how many features can fail inspection before the entire dataset fails, for a given target failure ratio (percentage)?

The sample size is determined based on four factors:

- The probability (p) of the outcome, that is, the probability that a given feature will pass rather than fail. Because there is no prior knowledge of the proportion of features from a given client that are likely to pass or fail, the tool assumes that passing and failing are equally likely and uses p = 0.5. This is the most pessimistic (conservative) value, because the variance term p(1 - p) is at its maximum when p = 0.5, which produces the largest sample size.

- The population size (N).

- The acceptable margin of error in the confidence interval (m).

- The z-statistic for the desired confidence level (z). This is used to compare the sample to a normal distribution. The value is supplied by a lookup table.
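The claim that p = 0.5 is the most conservative choice can be checked with a short derivation: the variance term p(1 - p) has a single turning point, and it is a maximum.

$$\frac{d}{dp}\,p(1-p) = 1 - 2p = 0 \;\Rightarrow\; p = 0.5, \qquad \frac{d^2}{dp^2}\,p(1-p) = -2 < 0, \qquad p(1-p)\big|_{p=0.5} = 0.25$$

Because the infinite-population sample size is proportional to p(1 - p), any other assumed value of p would give a smaller sample for the same z and margin of error.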

For an infinite population, the equation for determining the sample size (n) is:

$$n = \left(\frac{z}{m}\right)^2 p\,(1 - p)$$

This value must then be adjusted for the actual (finite) population size N, which gives the actual sample size (n'):

$$n' = \frac{nN}{n + (N - 1)}$$

Failure threshold

The failure threshold value is given by the Test of Proportions equation. This equation determines whether the number of failures is significant enough to fail the entire dataset, given the sample size, confidence level, and specified failure ratio. The failure threshold depends on three factors:

- The size of the sample being tested (n' from above)

- The acceptable maximum failure ratio (r)

- The z-statistic for the desired confidence level (z), which is used to compare the sample to a normal distribution. This value is supplied by a lookup table.

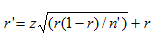

The maximum failure ratio allowable (r') is given by this equation:

Because r' is a ratio, it must be multiplied by the sample size (n') to give the maximum number of allowable failures (f):

$$f = r' \cdot n'$$
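The automatic method can be sketched end to end as follows. This is a minimal illustration, not the Sampling check's implementation. The z-statistics come from Python's `statistics.NormalDist` instead of the tool's lookup table, using a two-sided value for the sample-size confidence level and a one-sided value for the failure test; both choices are assumptions. The equation for r' is not reproduced in this text, so the sketch assumes the standard one-sample test-of-proportions bound r' = r + z * sqrt(r(1 - r)/n'). All function names are hypothetical.

```python
import math
from statistics import NormalDist


def sample_size(population, confidence, margin, p=0.5):
    """Return (n, n_prime) from the sample-size equations.

    n  = (z / m)^2 * p * (1 - p)     (infinite population)
    n' = n * N / (n + (N - 1))       (adjusted for the finite population N)
    Assumption: z is the two-sided critical value for `confidence`.
    """
    z = NormalDist().inv_cdf((1 + confidence) / 2)
    n = (z / margin) ** 2 * p * (1 - p)
    n_prime = n * population / (n + (population - 1))
    return n, n_prime


def max_failures(n_prime, max_failure_ratio, confidence):
    """Return (r_prime, f) for the failure threshold.

    Assumption: r' = r + z * sqrt(r * (1 - r) / n') with a one-sided z.
    f = r' * n', as described in the text.
    """
    z = NormalDist().inv_cdf(confidence)
    r = max_failure_ratio
    r_prime = r + z * math.sqrt(r * (1 - r) / n_prime)
    return r_prime, r_prime * n_prime


def dataset_passes(actual_failures, allowed_failures):
    """The dataset fails when the actual failures exceed the allowed maximum."""
    return actual_failures <= allowed_failures


n, n_prime = sample_size(1500, 0.95, 0.05)
r_prime, f = max_failures(n_prime, 0.05, 0.95)
print(round(n, 1), round(n_prime, 1), round(f, 1))
```

For a population of 1,500 features, a 95 percent confidence level, and a 5 percent margin of error, the sketch gives n ≈ 384.1 and n' ≈ 306; with a maximum failure ratio of 0.05 it then allows about 21.6 failures in that sample.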

Remediation

If a dataset fails (that is, the number of actual failures exceeds the maximum number of allowable failures), it is not sufficient to fix only the failures that were detected and then pass the dataset. A failure means that the sample has revealed a deficiency in the entire dataset, not just in the detected features. The quality of the entire dataset must be improved, and the dataset must then pass a retest based on a new random sample.

References

The following references were used to determine the equations used by the Sampling check:

Burt, J., and G. Barber. Elementary Statistics for Geographers. New York: The Guilford Press. 1996.

McGrew, J., and C. Monroe. Introduction to Statistical Problem Solving in Geography, Second Edition. McGraw-Hill. 2000.

A polygon grid in the map or loaded from a geodatabase

A polygon grid divides a large dataset into smaller sections. These sections can be used to assign areas of responsibility for quality control (QC) tasks or as the extents of map sheets. When you use a polygon grid with the Sampling check, you specify how many grid cells are randomly selected. From those randomly selected cells, the Sampling check selects features from the feature classes you want to include in the sample. This allows you to perform QC on a specified percentage of grid cells (map sheets).
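A minimal sketch of the grid-based idea, using plain Python data in place of a map or geodatabase: grid cells are identifiers, and each feature is tagged with the identifier of the cell it falls in. The names `sample_grid`, `cell_ids`, and `features` are hypothetical. The actual check works with polygon grid layers and feature classes, and the text does not say how features that cross cell boundaries are handled.

```python
import random


def sample_grid(cell_ids, features, cells_to_select, seed=None):
    """Randomly pick grid cells, then return the features inside them.

    cell_ids: list of grid cell identifiers.
    features: list of (feature_id, cell_id) pairs.
    Raises ValueError if cells_to_select exceeds the number of cells.
    """
    rng = random.Random(seed)                          # seed only for repeatable runs
    chosen = set(rng.sample(cell_ids, cells_to_select))
    selected = [fid for fid, cell in features if cell in chosen]
    return sorted(chosen), selected


cells = ["A1", "A2", "B1", "B2"]
features = [(1, "A1"), (2, "A1"), (3, "B2"), (4, "A2")]
chosen, selected = sample_grid(cells, features, 2, seed=7)
print(chosen, selected)
```

To sample a percentage of the grid instead of a fixed count, `cells_to_select` could be computed as `round(len(cell_ids) * percentage / 100)`.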