Authoring a geoprocessing service entails authoring the tasks within the service. Authoring a task means selecting or creating a tool, defining the task's input and output parameters, and specifying the location of any data the task uses. Here are the steps in a bit more detail:

- Selecting one or more geoprocessing tools that will become the tasks in your service. You can use one of the many system tools delivered with ArcGIS or create your own tools using ModelBuilder or Python scripting.

- Gathering the input data necessary to execute the tool. Typically, these datasets are layers in the ArcMap table of contents.

- Executing the tool to create a result in the Results window.

- Defining the symbology of the input and output datasets, if necessary.

- Sharing the result as a geoprocessing service. When you share a result, you use the Service Editor to define the input modes of the datasets (described below) along with other properties of the service and its tasks.

Example: a simple supply and demand model

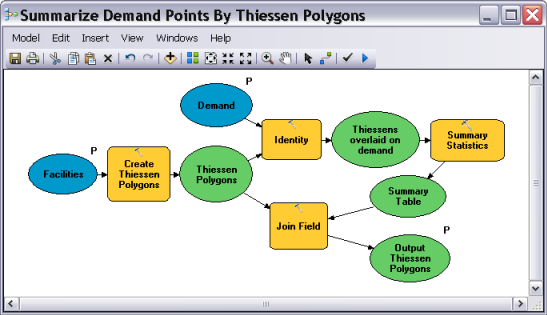

Illustrated below is an example of a tool created with ModelBuilder that is to be made into a task. The model (and the published task) is a very simple supply and demand assignment model. Given a set of point locations representing the supply of services (such as emergency response or retail facilities) and another set of points representing demand (such as households or businesses that require the service), it assigns each demand point to the closest facility, creates a trade area polygon for each facility, and sums all demand within each facility's trade area. The output is a set of polygons, one for each input facility, with an attribute containing the total demand within the polygon.
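The assignment logic the model performs can be sketched in plain Python. This is a minimal sketch, not the model itself: it assumes planar coordinates and straight-line (Euclidean) distance, and it relies on the fact that a Thiessen polygon contains exactly the locations closer to its facility than to any other facility, so summing demand per polygon gives the same totals as summing demand per nearest facility. The function name `assign_demand` and the sample data are hypothetical.

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float]


def assign_demand(
    facilities: Dict[str, Point],
    demand_points: List[Tuple[Point, int]],
) -> Dict[str, int]:
    """Hypothetical helper: total demand per facility.

    Each demand point is assigned to its closest facility (Euclidean
    distance). Because a Thiessen polygon holds exactly the locations
    nearest its facility, these totals match the per-polygon sums.
    """
    totals = {name: 0 for name in facilities}
    for location, demand in demand_points:
        nearest = min(facilities, key=lambda name: math.dist(location, facilities[name]))
        totals[nearest] += demand
    return totals


# Sample data (hypothetical): two fire stations and three hazardous material sites.
stations = {"Station 1": (0.0, 0.0), "Station 2": (10.0, 0.0)}
sites = [((1.0, 1.0), 50), ((9.0, 2.0), 20), ((4.0, 0.0), 30)]

print(assign_demand(stations, sites))  # {'Station 1': 80, 'Station 2': 20}
```

The real model produces polygons as well as totals; this sketch only reproduces the demand attribute that ends up on each polygon.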

Note that in the above model, the Facilities, Demand, and Output Thiessen Polygons variables are model parameters (they have a P next to them). These model parameters become the task arguments that clients of your task provide when they execute the task.

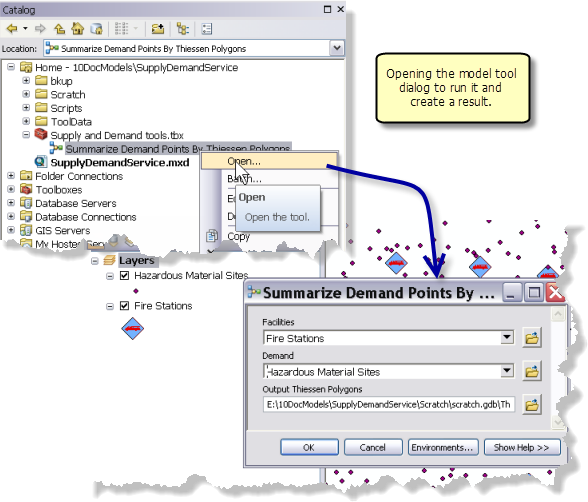

Model parameters appear on the tool dialog box, as illustrated below, where the model tool is opened from the Catalog window.

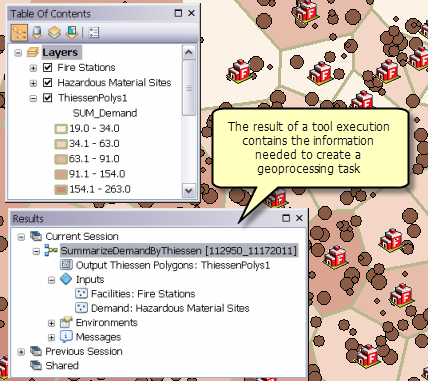

When the model is run as a tool, a result is written to the Results window as illustrated below. The result contains all the information ArcGIS needs to construct a task.

- The name and location of the toolbox and tool that created the result.

- The output (ThiessenPolys1) produced by running the tool.

- The schema (the feature type and attributes) of the output.

- The symbology of the output. In this example, the output symbology is a graduated color based on the sum of demand within each polygon.

- The inputs to the tool (the Fire Stations and Hazardous Material Sites layers).

- The schema (the feature type and attributes) of each input. In this example, Fire Stations have one text attribute: FacilityName. Hazardous Material Sites have one attribute as well: Demand, a long integer.

- The symbology of the inputs. In this example, the Fire Stations layer is symbolized with a fire station symbol at each location, and the Hazardous Material Sites layer is symbolized with graduated circles based on Demand.

- Geoprocessing environment settings that were in effect when the tool was executed.

One other important aspect of a result is that it is part of an ArcMap session, so it has access to all the layers and tables in the table of contents, and your published task can have access to these layers as well. The table of contents illustrated above contains only the two input layers and the output, but it could contain many more layers (such as a layer of retail locations) that can be made available to clients of the task.

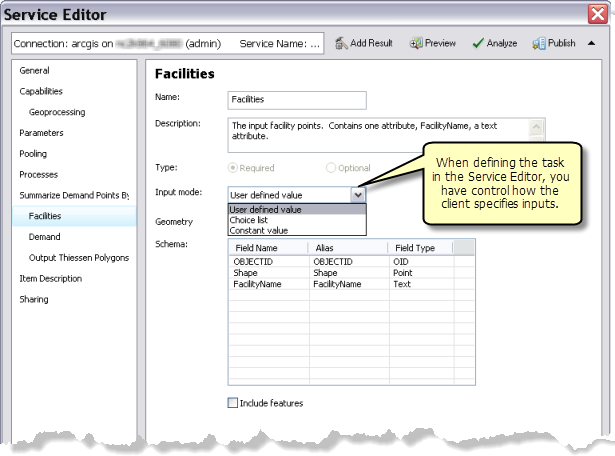

A geoprocessing service and its initial task are created by right-clicking a result and choosing Share As > Geoprocessing Service. This opens the Share as Service wizard; after you select a server connection and choose a name for the service, the Service Editor opens. The Service Editor is where you define properties and settings of your service as well as properties for individual task parameters. The illustration below shows setting the Input mode of the Facilities parameter. In this example, the Input mode is set to User defined value, which means the client is expected to provide a set of points with a text attribute, FacilityName.

The main point here is that the Service Editor gives you a fine degree of control over the definition of the inputs and outputs of your task. Although this occurs during the sharing process, it can affect how you author your tool.

Example: other input modes

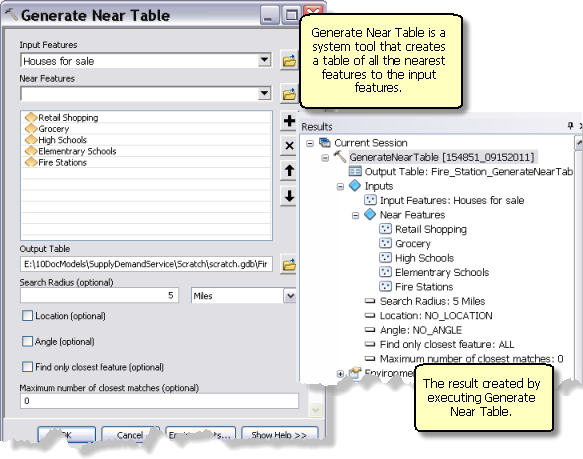

The Generate Near Table tool is a system tool that calculates the nearest features to input features and returns the results in a table. In this example, Generate Near Table is used to return all the elementary schools, high schools, fire stations, grocery stores, and retail stores within five miles of any input point. In this scenario, the input points are houses for sale; this is a simple service designed to help home buyers evaluate them. All dataset inputs are layers in the ArcMap table of contents.
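To make the shape of a near table concrete, here is a minimal sketch in plain Python of the kind of table this service returns. It is not how Generate Near Table is implemented: it assumes planar coordinates already expressed in miles, uses straight-line distance, and returns one row per near feature within the search radius, nearest first. The function `near_table` and the sample layers are hypothetical.

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float]
Row = Tuple[str, str, str, float]  # (input id, layer name, near feature id, distance)


def near_table(
    inputs: Dict[str, Point],
    near_layers: Dict[str, Dict[str, Point]],
    radius: float,
) -> List[Row]:
    """Hypothetical helper: list every near feature within `radius` of each input."""
    rows: List[Row] = []
    for in_id, in_xy in inputs.items():
        for layer_name, features in near_layers.items():
            for feat_id, feat_xy in features.items():
                d = math.dist(in_xy, feat_xy)
                if d <= radius:
                    rows.append((in_id, layer_name, feat_id, round(d, 2)))
    # Order rows by input point, then nearest feature first.
    rows.sort(key=lambda row: (row[0], row[3]))
    return rows


houses = {"House A": (0.0, 0.0)}
layers = {
    "High Schools": {"HS1": (3.0, 4.0), "HS2": (6.0, 8.0)},
    "Fire Stations": {"FS1": (1.0, 0.0)},
}
for row in near_table(houses, layers, radius=5.0):
    print(row)
# ('House A', 'Fire Stations', 'FS1', 1.0)
# ('House A', 'High Schools', 'HS1', 5.0)
```

In this model, a Choice list input corresponds to passing only the layers the client selected in `near_layers`.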

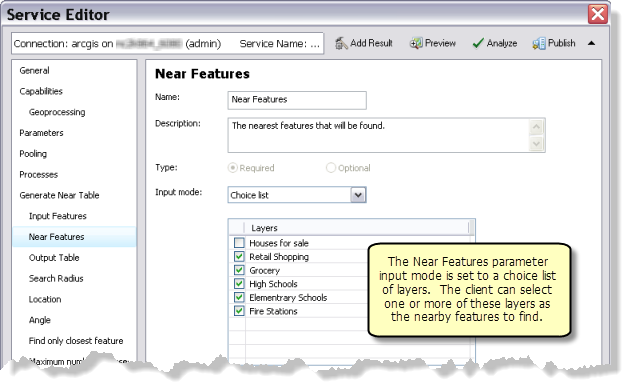

When the result is shared as a geoprocessing task, the Near Features can be a Choice list of nearby features to search, as illustrated below. The client will specify one or more of these layers. For example, the client may only be interested in the nearest High Schools and Elementary Schools, but none of the other layers.

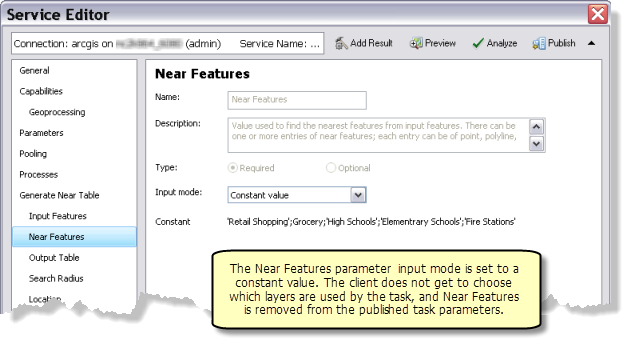

Alternatively, the Near Features parameter can be fixed as a Constant value, in which case it is no longer an argument to the task and the client has no control over which Near Features are returned; the client always gets a table of the nearest features from all the layers.

Input modes

In the examples above, you saw how setting the Input mode is an important task design and authoring decision. You set the input mode in the Service Editor because it pertains only to geoprocessing tasks, not to the underlying tool. There are three input modes for input features:

- User defined value: The client will create a feature set that will be transported across the Internet to be read by the task. When User defined value is specified, the feature type, attributes, spatial reference, and symbology of the input layer are stored with the feature set. Clients can retrieve this schema and use it to digitize features into the feature set or load features from a file on disk into the feature set. For input tables, there is a corresponding record set that stores the attribute schema of the table.

- Choice list: The client can enter the names of one (or more) of the layers you check in the list of layers. Whether the client can enter more than one layer depends on the tool. The Generate Near Table tool shown above allows multiple layers to be input. Other tools, such as Buffer, only allow one layer from the choice list to be input.

- Constant value: The task will use the value you specified when you ran the tool. Constant value is the same as removing the parameter—the layer (dataset) is used internally by your task and not exposed to the client.
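How each input mode determines the value a task actually receives can be modeled in a few lines of Python. This is an illustrative sketch of the behavior described in the list above, not the server's implementation; the `InputMode` enum, the `TaskParameter` class, and its `resolve` method are hypothetical names.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List, Optional, Union

Value = Union[str, List[str]]


class InputMode(Enum):
    USER_DEFINED = "User defined value"
    CHOICE_LIST = "Choice list"
    CONSTANT = "Constant value"


@dataclass
class TaskParameter:
    """Hypothetical model of one published input parameter."""
    name: str
    mode: InputMode
    published_value: Value  # the value used when the tool was run
    choices: List[str] = field(default_factory=list)  # layers checked in the list
    multi_value: bool = False  # whether the tool accepts several layers

    def resolve(self, client_value: Optional[Value] = None) -> Value:
        if self.mode is InputMode.CONSTANT:
            # Not a task argument: whatever the client sends is ignored.
            return self.published_value
        if self.mode is InputMode.CHOICE_LIST:
            picked = [client_value] if isinstance(client_value, str) else list(client_value or [])
            if not picked:
                raise ValueError(f"{self.name}: choose at least one layer")
            if not self.multi_value and len(picked) > 1:
                raise ValueError(f"{self.name}: only one layer may be chosen")
            unknown = [p for p in picked if p not in self.choices]
            if unknown:
                raise ValueError(f"{self.name}: not in the choice list: {unknown}")
            return picked if self.multi_value else picked[0]
        # USER_DEFINED: the client's own feature set is passed through to the task.
        if client_value is None:
            raise ValueError(f"{self.name}: a feature set is required")
        return client_value


schools_and_stations = ["High Schools", "Elementary Schools", "Fire Stations"]
near = TaskParameter("Near Features", InputMode.CHOICE_LIST, schools_and_stations,
                     choices=schools_and_stations, multi_value=True)
print(near.resolve(["High Schools", "Elementary Schools"]))
```

A tool like Generate Near Table would correspond to `multi_value=True`, while a tool like Buffer, which takes a single layer, would correspond to `multi_value=False`.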

Example: Script tool

You can also create a task using a script tool. The illustration below shows the property page of a script tool that does the exact same work as the simple supply and demand model above, only using Python instead of ModelBuilder. It has the same parameters as the model and the same procedure is used to create a task: run the tool to create a result, then share the result as a geoprocessing service.

Project data and the data store

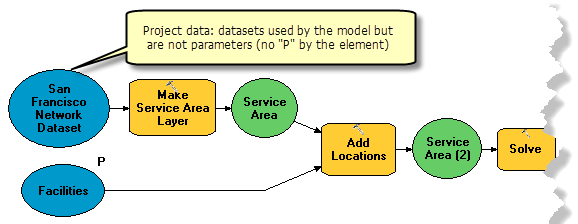

Project data is a term used by geoprocessing to describe input data that is not a parameter; that is, the data is not supplied by the user of the tool or task, but is used internally by the tool or task. For example, the San Francisco Network Dataset variable in the model below is project data because it is used by the model but not exposed as a parameter. Essentially, a model's project data is a blue oval without a P next to it.

Project data can appear in scripts as well, as shown in the Python code snippet below.

```python
import arcpy

# The inputPoints variable is considered to be project data
# since it is not an input parameter.
inputPoints = r"c:\data\Toronto\residential.gdb\shelters"

arcpy.Buffer_analysis(inputPoints, 'shelterBuffers', '1500 Meters')
```

In the Service Editor, an input data parameter that has an Input mode of Constant value is equivalent to project data—it is data used by the tool but not exposed as a task argument. You can think of an input mode of Constant value as removing the P next to the variable in ModelBuilder.

Project data is an important consideration when authoring and sharing a task because the server must be able to access the project data when the task executes. Geoprocessing services tend to use many different datasets stored in different locations, and it is not unusual for problems in a service to be traced back to project data access issues.

When you publish your result, the publishing process scans all the models and scripts used to produce the result and discovers your project data. It then determines what to do with this data. There are only two choices:

- If the project data can be found in the server's data store, the published task will use the data in the data store.

- If the project data cannot be found in the server's data store, it is copied to the server, placed in a known location accessible by the server (the server's input directory), and the published task will use the copied data. The copied data does not become part of the server's data store. If you republish the task, the data will be copied again.
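The publishing decision described in this list can be sketched as a simple path check. This is a hypothetical model, not the publisher's actual logic: it assumes the data store is just a list of registered folder paths and treats project data as found when its path lies inside one of them. The function name `resolve_project_data`, the registered folders, and the input directory path are made up for illustration, and the copy destination shown is only indicative of "somewhere under the input directory".

```python
from pathlib import PureWindowsPath
from typing import Iterable, Tuple


def resolve_project_data(
    data_path: str,
    registered_folders: Iterable[str],
    server_input_dir: str,
) -> Tuple[str, str]:
    """Hypothetical sketch: decide whether project data is referenced or copied.

    Returns ("data store", path) when the data lies under a registered folder,
    otherwise ("copied", destination) under the server's input directory.
    """
    path = PureWindowsPath(data_path)
    for folder in registered_folders:
        root = PureWindowsPath(folder)
        # PureWindowsPath compares case-insensitively, so C:\Data matches c:\data.
        if path == root or root in path.parents:
            return ("data store", str(path))
    # Not in the data store: copied again on every publish (illustrative destination).
    return ("copied", str(PureWindowsPath(server_input_dir) / path.name))


registered = [r"C:\Data\Shared"]
print(resolve_project_data(r"c:\data\shared\roads.shp", registered, r"D:\input"))
print(resolve_project_data(r"c:\data\Toronto\residential.gdb\shelters", registered, r"D:\input"))
```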

About the data store

Each ArcGIS Server installation contains a data store. The data store is a way for you to give the server a list of data locations that the server can access. When the data can be accessed by the server, the data found on your local machine will not be copied to the server when publishing.

For more information about registering your data with ArcGIS Server, see:

Simple data in, simple data out

A GIS service has to work with the simplest of all clients: a web browser running on a computer that does not have any GIS capabilities. Such simple clients know only how to send packets of simple data to a server, such as text, numbers, tables, and geographic features and their attributes. These clients are unaware of more advanced geographic data representations that you use in ArcGIS for Desktop, such as network datasets, topologies, TINs, relationship classes, geometric networks, and so on. These datasets are known as complex datasets; they model complex relationships between simple features. Complex datasets cannot be transported across the Internet; only simple features, tables, rasters, and files can be transported.

When authoring a task, you need to be aware that all clients, whether they are web applications, Explorer for ArcGIS, or ArcGIS for Desktop, only know how to send and receive (transport) these simple datasets. Even if you know your service will only be accessed by sophisticated clients such as ArcMap, you are still limited to simple input data when authoring your tasks.
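To show what "simple data" looks like in practice, here is a sketch that builds a small point feature set as JSON, the kind of payload a browser client could send for a User defined value input such as the Facilities parameter. It assumes the Esri JSON feature set layout used by the ArcGIS REST API (`geometryType`, `spatialReference`, and `features` with `geometry` and `attributes`); the helper name and coordinates are hypothetical, and a real client should take the schema from the task rather than hard-coding it.

```python
import json
from typing import List, Tuple


def facilities_feature_set(stations: List[Tuple[str, float, float]], wkid: int = 4326) -> str:
    """Hypothetical helper: encode named points as an Esri JSON feature set.

    Only simple values are used (text, numbers, x/y coordinates), which is
    what any client, even a plain web browser, can send over the Internet.
    """
    feature_set = {
        "geometryType": "esriGeometryPoint",
        "spatialReference": {"wkid": wkid},
        "features": [
            {"geometry": {"x": x, "y": y}, "attributes": {"FacilityName": name}}
            for name, x, y in stations
        ],
    }
    return json.dumps(feature_set)


payload = facilities_feature_set([("Station 1", -122.42, 37.77), ("Station 2", -122.40, 37.79)])
print(payload)
```

Nothing in this payload refers to a network dataset, topology, or other complex dataset; those exist only on the server side, as project data.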

See the topic Input modes and parameter data types for more information about transportable and nontransportable datasets.

Drawing task results with a map service

When publishing a geoprocessing service, you can choose to view the result of your task as a map (in addition to any other results of your task). The map is created on the server using ArcMap, then transported back to the client. The symbology, labeling, transparency, and all other properties of the returned map match those of the output layer in your current ArcMap session. The workflow to create a result map service is simple:

- Run your tool to create a result in the Results window. Any data created by your tool will be added to the ArcMap table of contents as a layer.

- Change the symbology of layers that are the output of your tool.

- Right-click the result and share as a geoprocessing service.

- In the Service Editor, click Parameters and enable View result with a map service.

If your output layer is drawing unique values, you may need to uncheck <all other values> in the layer properties to force recalculation of the unique values prior to drawing. See the topic Creating a layer symbology file for more details.

Documenting your task

Providing good documentation for your service and tasks is essential if you want your service to be discovered, understood, and used by the widest audience possible.

Good documentation starts with the tool that created the result you're publishing. Every tool in the system has an item description that can be viewed and edited by right-clicking the tool in the Catalog window and choosing Item Description.

Learn more about documenting geoprocessing services and tasks

Geoprocessing environment settings

Geoprocessing environment settings are additional parameters that affect a tool's results. They differ from normal tool parameters in that they don't appear on a tool's dialog box (with certain exceptions). Rather, they are values you set once using a separate dialog box and are interrogated and used by tools when they are run.

Environment settings hierarchy

There are several ways you can change environment settings, and where you make the changes can be thought of as a level in a hierarchy. Environment settings are passed down from level to level. At each level, you can override the passed-down environment settings with another setting.

- Application level: in the main menu, click Geoprocessing > Environments. Changes you make here affect the execution of any tool.

- Tool level: on a tool's dialog box, click the Environments button to open the Environment Settings dialog box. All environment settings are listed in this dialog box regardless of whether the tool honors them, so consult the tool's reference page to determine whether a given environment is honored by the tool. Any settings you make here override settings made at the application level. These settings apply only to that execution of the tool; application-level settings are not overwritten.

- Model, model process, or script level: in a model or script, you have a high degree of control over environment settings. You can change an environment for a particular tool, a set of tools, or for every tool in the model or script. The settings you make at this level override all settings made at the tool or application level.
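The override behavior of this hierarchy maps neatly onto Python's `collections.ChainMap`, which looks a key up in each mapping in order and returns the first match. The sketch below models the three levels as plain dictionaries; it does not use arcpy, and although the keys are named after real environments (workspace, cell size, output coordinate system), the values are illustrative only.

```python
from collections import ChainMap

# Illustrative settings at each level of the hierarchy.
application = {
    "workspace": r"C:\data\default.gdb",
    "cellSize": 30,
    "outputCoordinateSystem": "WGS 1984",
}
tool = {"cellSize": 10}                         # set with one tool's Environments button
script = {"workspace": r"C:\scratch\temp.gdb"}  # set inside a model or script

# Lookups search the script level first, then the tool level, then the application level.
effective = ChainMap(script, tool, application)

print(effective["workspace"])               # C:\scratch\temp.gdb  (script overrides)
print(effective["cellSize"])                # 10  (tool overrides application)
print(effective["outputCoordinateSystem"])  # WGS 1984  (inherited from application)
print(application["cellSize"])              # 30  (application setting is not overwritten)
```

Because each level is a separate mapping, overriding a setting at a lower level never modifies the level above it, which mirrors the statement that tool-level settings do not overwrite application-level ones.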

Environment settings are stored in a result

A result in the Results window stores all environment settings made at the application or tool level. When your task executes on the server, the environment settings in the result are used during task execution. Settings you make at the model or script level, however, are not shown in the result, but they are still used during task execution. You can think of the environment settings in the result as being passed on to the model or script tool, which is then free to override them.
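The role of the result can be sketched as a snapshot: the application- and tool-level settings are flattened into the result when the tool runs, and on the server that snapshot becomes the base layer beneath whatever the model or script sets. This is an illustrative model using plain dictionaries and `collections.ChainMap`; the function `run_on_server` and all values are hypothetical, and this is not how ArcGIS Server actually stores results.

```python
from collections import ChainMap

# Settings in effect when the tool ran in ArcMap (illustrative values).
application = {"workspace": r"C:\data\default.gdb", "cellSize": 30}
tool = {"cellSize": 10}

# The result stores a flattened snapshot of the application- and tool-level settings.
result_snapshot = dict(ChainMap(tool, application))


def run_on_server(snapshot: dict, script_overrides: dict) -> dict:
    """Hypothetical: the settings a task sees when it executes on the server.

    The snapshot is passed on to the model or script, whose own settings
    (which are not visible in the result) take precedence.
    """
    return dict(ChainMap(script_overrides, snapshot))


print(result_snapshot["cellSize"])  # 10
server_settings = run_on_server(result_snapshot, {"cellSize": 5})
print(server_settings["cellSize"])   # 5
print(server_settings["workspace"])  # C:\data\default.gdb
```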