Summary

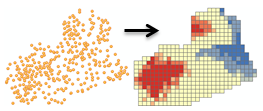

Given incident points or weighted features (points or polygons), creates a map of statistically significant hot and cold spots using the Getis-Ord Gi* statistic. It evaluates the characteristics of the input feature class to produce optimal results.

Illustration

Usage

This tool identifies statistically significant spatial clusters of high values (hot spots) and low values (cold spots). It automatically aggregates incident data, identifies an appropriate scale of analysis, and corrects for both multiple testing and spatial dependence. This tool interrogates your data in order to determine settings that will produce optimal hot spot analysis results. If you want full control over these settings, use the Hot Spot Analysis tool instead.

The computed settings used to produce optimal hot spot analysis results are reported in the Results window. The associated workflows and algorithms are explained in How Optimized Hot Spot Analysis works.

This tool creates a new Output Feature Class with a z-score, p-value, and confidence level bin (Gi_Bin) for each feature in the Input Feature Class.

The Gi_Bin field identifies statistically significant hot and cold spots, corrected for multiple testing and spatial dependence using the False Discovery Rate (FDR) correction method. Features in the +/-3 bins (features with a Gi_Bin value of either +3 or -3) are statistically significant at the 99 percent confidence level; features in the +/-2 bins reflect a 95 percent confidence level; features in the +/-1 bins reflect a 90 percent confidence level; and the clustering for features with 0 for the Gi_Bin field is not statistically significant.
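The binning described above can be sketched in plain Python. This is a minimal illustration that assumes the standard Benjamini-Hochberg FDR procedure, applied separately at the 99, 95, and 90 percent levels; the function names `fdr_threshold` and `gi_bins` are hypothetical, and the tool's own implementation may differ in detail.

```python
def fdr_threshold(p_values, alpha):
    """Return the largest p-value that passes the Benjamini-Hochberg
    test at level alpha, or None if no p-value passes."""
    ranked = sorted(p_values)
    m = len(ranked)
    threshold = None
    for rank, p in enumerate(ranked, start=1):
        if p <= alpha * rank / m:
            threshold = p
    return threshold


def gi_bins(z_scores, p_values):
    """Assign each feature a bin from -3 to +3 using the sign of its
    z-score and its FDR-corrected significance (assumed procedure)."""
    levels = [(3, 0.01), (2, 0.05), (1, 0.10)]  # bin magnitude, alpha
    thresholds = [(b, fdr_threshold(p_values, a)) for b, a in levels]
    bins = []
    for z, p in zip(z_scores, p_values):
        magnitude = 0
        for b, t in thresholds:
            if t is not None and p <= t:
                magnitude = b  # most stringent level that still passes
                break
        bins.append(magnitude if z > 0 else -magnitude)
    return bins
```

Because the cutoff for each confidence level depends on the full set of p-values, a feature whose uncorrected p-value is below 0.05 can still land in bin 0 when many features are tested.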

The z-score and p-value fields do not reflect any kind of FDR (False Discovery Rate) correction. For more information on z-scores and p-values, see What is a z-score? What is a p-value?

When the Input Feature Class is not projected (that is, when coordinates are given in degrees, minutes, and seconds) or when the output coordinate system is set to a Geographic Coordinate System, distances are computed using chordal measurements. Chordal distance measurements are used because they can be computed quickly and provide very good estimates of true geodesic distances, at least for points within about thirty degrees of each other. Chordal distances are based on an oblate spheroid. Given any two points on the earth's surface, the chordal distance between them is the length of a line, passing through the three-dimensional earth, to connect those two points. Chordal distances are reported in meters.
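The chordal distance described above can be sketched as follows. This is an illustration only: it assumes the WGS84 spheroid and points at zero height, and the function names `to_cartesian` and `chordal_distance` are hypothetical rather than part of the tool.

```python
import math

# WGS84 spheroid parameters (an assumption made for this sketch)
WGS84_A = 6378137.0            # semi-major axis, meters
WGS84_F = 1 / 298.257223563    # flattening


def to_cartesian(lat_deg, lon_deg):
    """Convert latitude/longitude in degrees (height 0) to 3D
    Earth-centered Cartesian coordinates in meters."""
    lat = math.radians(lat_deg)
    lon = math.radians(lon_deg)
    e2 = WGS84_F * (2 - WGS84_F)  # first eccentricity squared
    # Prime vertical radius of curvature at this latitude
    n = WGS84_A / math.sqrt(1 - e2 * math.sin(lat) ** 2)
    x = n * math.cos(lat) * math.cos(lon)
    y = n * math.cos(lat) * math.sin(lon)
    z = n * (1 - e2) * math.sin(lat)
    return x, y, z


def chordal_distance(lat1, lon1, lat2, lon2):
    """Length in meters of the straight line through the earth
    connecting two points on the spheroid's surface."""
    return math.dist(to_cartesian(lat1, lon1), to_cartesian(lat2, lon2))
```

For nearby points the chord and the geodesic are almost the same length; the gap grows as the points move farther apart, which is why the estimate is best within about thirty degrees.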

The Input Features may be points or polygons. With polygons, an Analysis Field is required.

If you provide an Analysis Field, it should contain a variety of values. The math for this statistic requires some variation in the variable being analyzed; for example, it cannot solve if all input values are 1.
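To see why variation is required, consider a minimal sketch of the Getis-Ord Gi* z-score for a single feature, written from the published formula. The function name `gi_star` and the simple list-based weights are assumptions for illustration; the tool builds its spatial weights internally from the scale of analysis it selects.

```python
import math


def gi_star(values, weights_row):
    """Getis-Ord Gi* z-score for one target feature.

    values      -- analysis field values for all n features
    weights_row -- spatial weight between the target feature and each
                   feature, including the target itself
    """
    n = len(values)
    mean = sum(values) / n
    # Population standard deviation of the analysis field
    s = math.sqrt(sum((v - mean) ** 2 for v in values) / n)
    if s == 0:
        # All values identical: the denominator is zero, so no solution
        raise ValueError("analysis field has no variation; Gi* is undefined")
    sum_w = sum(weights_row)
    sum_w2 = sum(w * w for w in weights_row)
    numerator = sum(w * v for w, v in zip(weights_row, values)) - mean * sum_w
    denominator = s * math.sqrt((n * sum_w2 - sum_w ** 2) / (n - 1))
    return numerator / denominator
```

A usage example with hypothetical values: with `values = [10, 9, 8, 1, 1, 1, 2, 1]` and `weights_row = [1, 1, 1, 0, 0, 0, 0, 0]`, the target feature sits among high values and receives a positive z-score, while passing `[1, 1, 1, 1]` as `values` raises the error because the standard deviation is zero.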

With an Analysis Field, this tool is appropriate for all data (points or polygons) including sampled data. In fact, this tool is effective and reliable even in cases where there is oversampling. With lots of features (oversampling) the tool has more information to compute accurate and reliable results. With few features (undersampling), the tool will still do all it can to produce accurate and reliable results, but there will be less information to work with.

Because the underlying Getis-Ord Gi* statistic used by this tool is asymptotically normal, even when the Analysis Field contains skewed data, results are reliable.

With point data you will sometimes be interested in analyzing data values associated with each point feature and will consequently provide an Analysis Field. In other cases you will only be interested in evaluating the spatial pattern (clustering) of the point locations or point incidents. The decision to provide an Analysis Field or not will depend on the question you are asking.

- Analyzing point features with an Analysis Field allows you to answer questions like "Where do high and low values cluster?" The analysis field you select might represent the following:

  - Counts (such as the number of traffic accidents at street intersections)

  - Rates (such as city unemployment, where each city is represented by a point feature)

  - Averages (such as the mean math test score among schools)

  - Indices (such as a consumer satisfaction score for car dealerships across the country)

- Analyzing point features when there is no Analysis Field allows you to identify where point clustering is unusually (statistically significantly) intense or sparse. This type of analysis answers questions like "Where are there many points?" and "Where are there very few points?"

When you don't provide an Analysis Field, the tool aggregates your points to obtain point counts to use as the analysis field. There are three possible aggregation schemes:

- For COUNT_INCIDENTS_WITHIN_FISHNET_POLYGONS, an appropriate polygon cell size is computed and used to create a fishnet polygon mesh. The fishnet is positioned over the incident points and the points within each polygon cell are counted. If no Bounding Polygons Defining Where Incidents Are Possible feature layer is provided, the fishnet cells with zero points are removed and only the remaining cells are analyzed. When a bounding polygon feature layer is provided, all fishnet cells that fall within the bounding polygons are retained and analyzed. The point counts for each polygon cell are used as the analysis field.

- For COUNT_INCIDENTS_WITHIN_AGGREGATION_POLYGONS, you need to provide the Polygons For Aggregating Incidents Into Counts feature layer. The point incidents falling within each polygon are counted, and these polygons with their associated counts are then analyzed. COUNT_INCIDENTS_WITHIN_AGGREGATION_POLYGONS is an appropriate aggregation strategy when points are associated with administrative units such as tracts, counties, or school districts. You might also use this option if you want the study area to remain fixed across multiple analyses so that the results are easier to compare.

- For SNAP_NEARBY_INCIDENTS_TO_CREATE_WEIGHTED_POINTS, a snap distance is computed and used to aggregate nearby incident points. Each aggregated point is given a count reflecting the number of incidents that were snapped together. The aggregated points are then analyzed with the incident counts serving as the analysis field. The SNAP_NEARBY_INCIDENTS_TO_CREATE_WEIGHTED_POINTS option is an appropriate aggregation strategy when you have many coincident, or nearly coincident, points and want to maintain aspects of the spatial pattern of the original point data. In many cases you will want to try both SNAP_NEARBY_INCIDENTS_TO_CREATE_WEIGHTED_POINTS and COUNT_INCIDENTS_WITHIN_FISHNET_POLYGONS and see which result best reflects the spatial pattern of the original point data. Fishnet solutions can artificially separate clusters of point incidents, but the output may be easier for some people to interpret than weighted point output.
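The idea behind snapping can be illustrated with a simple greedy pass over the incidents. This sketch is not the tool's algorithm: the function name `snap_incidents`, the running-centroid update, and the caller-supplied snap distance are all assumptions for illustration (the tool computes its own snap distance).

```python
import math


def snap_incidents(points, snap_distance):
    """Merge each incident into the first weighted point that lies
    within snap_distance; otherwise start a new weighted point.

    Returns a list of (x, y, count) tuples.
    """
    weighted = []  # each entry is [x, y, count]
    for x, y in points:
        for wp in weighted:
            if math.hypot(x - wp[0], y - wp[1]) <= snap_distance:
                # Move the weighted point to the running centroid
                wp[0] = (wp[0] * wp[2] + x) / (wp[2] + 1)
                wp[1] = (wp[1] * wp[2] + y) / (wp[2] + 1)
                wp[2] += 1
                break
        else:
            weighted.append([x, y, 1])
    return [tuple(wp) for wp in weighted]
```

The resulting counts play the role of the analysis field, and the weighted points stay close to the original incident locations, which is what preserves the spatial pattern of the input.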

When you select COUNT_INCIDENTS_WITHIN_FISHNET_POLYGONS for the Incident Data Aggregation Method, you may optionally provide a Bounding Polygons Defining Where Incidents Are Possible feature layer. When no bounding polygons are provided, the tool cannot know if a location without an incident should be a zero to indicate that an incident is possible at that location, but didn't occur, or if the location should be removed from the analysis because incidents would never occur at that location. Consequently, when no bounding polygons are provided, only fishnet cells with at least one incident are retained for analysis. If this isn't the behavior you want, you can provide a Bounding Polygons Defining Where Incidents Are Possible feature layer to ensure that all locations within the bounding polygons are retained. Fishnet cells with no underlying incidents will receive an incident count of zero.
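The difference between the two behaviors can be sketched in plain Python. This is an illustration under stated assumptions: square cells of a caller-supplied size, and a hypothetical `possible_cells` collection standing in for the fishnet cells that fall within the bounding polygons. The tool computes its own cell size and performs the polygon overlay itself.

```python
import math
from collections import Counter


def fishnet_counts(points, cell_size, origin=(0.0, 0.0)):
    """Count incidents per square fishnet cell, keyed by (column, row)."""
    counts = Counter()
    for x, y in points:
        col = math.floor((x - origin[0]) / cell_size)
        row = math.floor((y - origin[1]) / cell_size)
        counts[(col, row)] += 1
    return counts


def cells_to_analyze(counts, possible_cells=None):
    """Select the cells that go into the analysis.

    Without bounding polygons, only cells with at least one incident
    are kept. With them, every possible cell is kept and cells without
    incidents receive a count of zero.
    """
    if possible_cells is None:
        return dict(counts)
    return {cell: counts.get(cell, 0) for cell in possible_cells}
```

With three hypothetical incidents at `(0.5, 0.5)`, `(0.7, 0.2)`, and `(2.5, 0.5)` and a cell size of 1, the empty cell `(1, 0)` is dropped when no bounding cells are given and retained with a count of zero when it is listed in `possible_cells`.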

Any incidents falling outside the Bounding Polygons Defining Where Incidents Are Possible or the Polygons For Aggregating Incidents Into Counts will be excluded from analysis.

If you have the ArcGIS Spatial Analyst extension, you can choose to create a Density Surface of your point Input Features. With point Input Features, the Density Surface parameter is enabled when you specify an Analysis Field or select SNAP_NEARBY_INCIDENTS_TO_CREATE_WEIGHTED_POINTS for the Incident Data Aggregation Method. The output Density Surface will be clipped to the raster analysis mask specified in the environment settings. If no raster mask is specified, you will need an Advanced license, as the output raster layer will be clipped to a convex hull around the Input Features.

You should use the Generate Spatial Weights Matrix and Hot Spot Analysis (Getis-Ord Gi*) tools if you want to identify space-time hot spots. More information about space-time cluster analysis is provided in the Space-Time Cluster Analysis topic.

Map layers can be used to define the Input Feature Class. When using a layer with a selection, only the selected features are included in the analysis.

The Output Features layer is automatically added to the table of contents with default rendering applied to the Gi_Bin field. The hot-to-cold rendering is defined by a layer file in <ArcGIS>/Desktop10.x/ArcToolbox/Templates/Layers. You can reapply the default rendering, if needed, by importing the template layer symbology.

Syntax

OptimizedHotSpotAnalysis_stats (Input_Features, Output_Features, {Analysis_Field}, {Incident_Data_Aggregation_Method}, {Bounding_Polygons_Defining_Where_Incidents_Are_Possible}, {Polygons_For_Aggregating_Incidents_Into_Counts}, {Density_Surface})

| Parameter | Explanation | Data Type |
| --- | --- | --- |
| Input_Features | The point or polygon feature class for which hot spot analysis will be performed. | Feature Layer |
| Output_Features | The output feature class to receive the z-score, p-value, and Gi_Bin results. | Feature Class |
| Analysis_Field (Optional) | The numeric field (number of incidents, crime rates, test scores, and so on) to be evaluated. | Field |
| Incident_Data_Aggregation_Method (Optional) | The aggregation method to use to create weighted features for analysis from incident point data. The options are COUNT_INCIDENTS_WITHIN_FISHNET_POLYGONS, COUNT_INCIDENTS_WITHIN_AGGREGATION_POLYGONS, and SNAP_NEARBY_INCIDENTS_TO_CREATE_WEIGHTED_POINTS. | String |
| Bounding_Polygons_Defining_Where_Incidents_Are_Possible (Optional) | A polygon feature class defining where the incident Input_Features could possibly occur. | Feature Layer |
| Polygons_For_Aggregating_Incidents_Into_Counts (Optional) | The polygons to use to aggregate the incident Input_Features in order to get an incident count for each polygon feature. | Feature Layer |
| Density_Surface (Optional) | The output density surface of point input features. This parameter is only enabled when Input_Features are points and you have the ArcGIS Spatial Analyst extension. The output surface created will be clipped to the raster analysis mask specified in your environment settings. If no raster mask is specified, you will need an Advanced license, as the output raster layer will be clipped to a convex hull around the Input Features. | Raster Dataset |

Code sample

OptimizedHotSpotAnalysis example 1 (Python window)

The following Python window script demonstrates how to use the OptimizedHotSpotAnalysis tool.

import arcpy
arcpy.env.workspace = r"C:\OHSA"
arcpy.OptimizedHotSpotAnalysis_stats("911Count.shp", "911OptimizedHotSpots.shp", "#", "SNAP_NEARBY_INCIDENTS_TO_CREATE_WEIGHTED_POINTS", "#", "#", "calls911Surface.tif")

OptimizedHotSpotAnalysis example 2 (stand-alone Python script)

The following stand-alone Python script demonstrates how to use the OptimizedHotSpotAnalysis tool.

# Analyze the spatial distribution of 911 calls in a metropolitan area

# Import system modules
import arcpy

# Overwrite existing output by default
arcpy.env.overwriteOutput = True

# Local variables
workspace = r"C:\OHSA\data.gdb"

try:
    # Set the current workspace (to avoid having to specify the full path
    # to the feature classes each time)
    arcpy.env.workspace = workspace

    # Create a polygon that defines where incidents are possible
    # Process: Minimum Bounding Geometry of 911 call data
    arcpy.MinimumBoundingGeometry_management("Calls911", "Calls911_MBG", "CONVEX_HULL",
                                             "ALL", "#", "NO_MBG_FIELDS")

    # Optimized Hot Spot Analysis of 911 call data using the fishnet
    # aggregation method with a bounding polygon of 911 call data
    # Process: Optimized Hot Spot Analysis
    ohsa = arcpy.OptimizedHotSpotAnalysis_stats("Calls911", "Calls911_ohsaFishnet", "#",
                                                "COUNT_INCIDENTS_WITHIN_FISHNET_POLYGONS",
                                                "Calls911_MBG", "#", "#")

except arcpy.ExecuteError:
    # If a geoprocessing error occurred when running a tool, print the messages
    print(arcpy.GetMessages())

Environments

Licensing information

- ArcGIS Desktop Basic: Yes

- ArcGIS Desktop Standard: Yes

- ArcGIS Desktop Advanced: Yes