In this topic

- Creating a plug-in data source

- SimplePoint plug-in data source

- Implementing a plug-in workspace factory helper

- Implementing a plug-in workspace helper

- Implementing a plug-in dataset helper

- Implementing a plug-in cursor helper

- Deploying PlugIn Data Sources

Creating a plug-in data source

A plug-in data source integrates a new data format completely into ArcGIS. ArcGIS works directly with this data, as it does with other supported data formats. The four key geodatabase concepts that you must understand when implementing a plug-in data source are the workspace factory, workspace, dataset, and cursor.

- A workspace factory is a dispenser of workspaces; it allows a client to create or connect to a workspace specified by a set of connection properties. Every data format supported in ArcGIS has a corresponding workspace factory class, for example, ShapefileWorkspaceFactory.

- A workspace is a container of spatial and nonspatial datasets (for example, feature classes, raster datasets, and tables). It provides methods to open existing datasets and to create new ones.

- A dataset is a collection of related data, usually stored or grouped together.

- A cursor allows you to browse the data. For example, the IFeatureCursor interface provides access to a set of features in a feature class.

Because a plug-in data source is a custom data source, you must create your own extension classes (a plug-in workspace factory helper, workspace helper, dataset helper, and cursor helper) to integrate the data into ArcGIS. The extension classes are simple POJOs that implement specific ArcObjects interfaces and are annotated with @ArcGISExtension. The following sections use an example to describe how to implement the extension classes required to integrate a custom data source. The example code is also available as the SimplePointPlugInDataSource sample.

SimplePoint plug-in data source

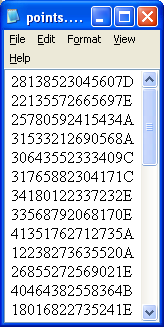

The SimplePoint custom data source used in this example is a text file containing geographic locations. The data has an unusual format, and converting it each time a new file is received is impractical. Implementing a plug-in data source allows the files to be accessed directly in ArcGIS, for example, from ArcCatalog.

The following screen shot shows the simple point data format. Each point is stored on its own line of an ASCII (American Standard Code for Information Interchange) text file. The first six characters contain the x-coordinate, the next six characters contain the y-coordinate, and the trailing characters contain the attribute values.
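To make the fixed-width layout concrete, the following plain-Java sketch parses one SimplePoint line. It assumes the character positions used later by the example's queryShape and queryValues methods: x in characters 0 to 5, y in characters 6 to 11, a two-character number in characters 12 and 13, and a single text character at the end of the line. SimplePointRecord is a hypothetical class for illustration only; it is not part of the sample or of ArcObjects.

```java
/** Hypothetical value holder for one parsed SimplePoint line (not an ArcObjects type). */
final class SimplePointRecord {
    final double x;
    final double y;
    final int number;
    final String text;

    SimplePointRecord(double x, double y, int number, String text) {
        this.x = x;
        this.y = y;
        this.number = number;
        this.text = text;
    }

    /**
     * Parses one fixed-width line (assumed layout): characters 0-5 hold x,
     * 6-11 hold y, 12-13 hold a numeric attribute, and the last character
     * is a text attribute. Lines shorter than 15 characters are rejected.
     */
    static SimplePointRecord parse(String line) {
        if (line == null || line.length() < 15) {
            throw new IllegalArgumentException("Line too short for the SimplePoint format: " + line);
        }
        // Double.parseDouble ignores leading and trailing spaces in padded columns.
        double x = Double.parseDouble(line.substring(0, 6));
        double y = Double.parseDouble(line.substring(6, 12));
        // Integer.parseInt does not accept spaces, so trim the two-character column first.
        int number = Integer.parseInt(line.substring(12, 14).trim());
        String text = line.substring(line.length() - 1);
        return new SimplePointRecord(x, y, number, text);
    }
}
```

For example, the line "123.45 67.8912A" parses to x = 123.45, y = 67.89, a number of 12, and the text value A.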

Implementing a plug-in workspace factory helper

A workspace factory helper class must implement the IPlugInWorkspaceFactoryHelper interface. This helper class works in conjunction with the existing ArcGIS PlugInWorkspaceFactory class. The workspace factory helper class must be annotated with @ArcGISExtension, and the categories attribute of the annotation must be set to ArcGISCategories.PlugInDataSource.

```java
@ArcGISExtension(categories = {ArcGISCategories.PlugInDataSource})
public class SimplePointWorkspaceFactoryHelper implements IPlugInWorkspaceFactoryHelper {
    // Implement the IPlugInWorkspaceFactoryHelper methods.
}
```

The PlugInWorkspaceFactory class implements IWorkspaceFactory and uses the plug-in workspace factory helper to get information about the data source and to browse for workspaces; together they act as a workspace factory for the data source.

The most important part of the implementation is the return value of IPlugInWorkspaceFactoryHelper.getWorkspaceFactoryTypeID(). It should return a class identifier (CLSID) that does not refer to any implementation. The EngineUtilities.getWorkspaceFactoryTypeID() method returns a suitable CLSID for the plug-in data source. The CLSID is used as an alias for the workspace factory of the data source that is created by PlugInWorkspaceFactory. See the following code example:

```java
public IUID getWorkspaceFactoryTypeID() throws IOException, AutomationException {
    return EngineUtilities.getWorkspaceFactoryTypeID(SimplePointWorkspaceFactoryHelper.class);
}
```

The remaining implementation of IPlugInWorkspaceFactoryHelper is relatively straightforward. The most difficult member to implement is often getWorkspaceString. The workspace string is used as a lightweight representation of the workspace.

The plug-in is the sole consumer of the workspace strings (through isWorkspace and openWorkspace), so their content is the developer's decision. For many data sources, including this example, the path to the workspace is chosen as the workspace string. Note the FileNames parameter of getWorkspaceString. This parameter may be null, in which case isWorkspace() should be called to determine whether the directory is a workspace of the plug-in's type. If the parameter is not null, examine the files in FileNames to determine whether the workspace is of the plug-in's type, and remove from the array any files that belong to the plug-in data source. This behavior is comparable to that of IWorkspaceFactory.getWorkspaceName().
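The getWorkspaceString rules above can be outlined in plain Java. This is a sketch under stated assumptions, not the interface implementation: a java.util.List<String> stands in for the FileNames parameter, the data file extension (for example, ".spt") is a hypothetical lowercase value supplied by the caller, and WorkspaceStringSketch is an illustrative class name.

```java
import java.io.File;
import java.util.List;
import java.util.Locale;

/** Hypothetical outline of the getWorkspaceString rules; not the ArcObjects interface. */
final class WorkspaceStringSketch {

    /** Returns true if the directory contains at least one file with the (lowercase) extension. */
    static boolean isWorkspace(File dir, String extension) {
        File[] files = dir.listFiles();
        if (files == null) {
            return false; // not a directory, or it cannot be read
        }
        for (File f : files) {
            if (f.isFile() && f.getName().toLowerCase(Locale.ROOT).endsWith(extension)) {
                return true;
            }
        }
        return false;
    }

    /**
     * Returns the directory path as the workspace string, or null if the folder is
     * not a workspace of this type. When fileNames is not null, the names that
     * belong to this data source are removed from the list (claimed).
     */
    static String getWorkspaceString(String dirPath, List<String> fileNames, String extension) {
        if (fileNames == null) {
            // No file list supplied: inspect the directory itself.
            return isWorkspace(new File(dirPath), extension) ? dirPath : null;
        }
        // removeIf returns true if at least one name matched and was removed.
        boolean found = fileNames.removeIf(
                name -> name.toLowerCase(Locale.ROOT).endsWith(extension));
        return found ? dirPath : null;
    }
}
```

Removing the claimed names is what keeps other data sources from also listing those files when a folder is browsed.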

The getDataSourceName() method is simple to implement: return a string representing the data source. The example returns SimplePoint. This is the only string that should not be localized. If the plug-in data source could be used in different countries, the other strings should be localized (for example, by using a resource file). For simplicity, the example does not localize its strings.

The openWorkspace() method creates an instance of the next class that must be implemented, the plug-in workspace helper. The workspace helper needs to be initialized with the location of the data; the example passes the workspace string (the directory path) to the helper's constructor. See the following code example:

```java
/**
 * Opens a workspace helper for the workspace identified by the workspace string.
 */
public IPlugInWorkspaceHelper openWorkspace(String workspaceString)
        throws IOException, AutomationException {
    // Return null if the parameter is null.
    if (workspaceString == null)
        return null;
    // Make sure the workspace string is a directory.
    File file = new File(workspaceString);
    if (!file.isDirectory())
        return null;
    // Instantiate and return a new workspace helper for the specified workspace.
    return new SimplePointWorkspaceHelper(workspaceString);
}
```

Plug-in workspace factories can also implement the optional interface IPlugInCreateWorkspace to support creation of workspaces for a plug-in data source.

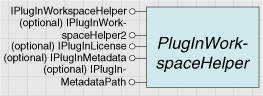

Implementing a plug-in workspace helper

A plug-in workspace helper represents a single workspace for datasets of the data source type. The class does not need to be publicly creatable, as the plug-in workspace factory helper is responsible for creating it in its openWorkspace method. See the following illustration:

The class must implement IPlugInWorkspaceHelper and must be annotated with @ArcGISExtension. The IPlugInWorkspaceHelper interface allows the data source's datasets to be browsed. Its most noteworthy member is openDataset, which creates and initializes an instance of a plug-in dataset helper. See the following code example:

```java
@ArcGISExtension
public class SimplePointWorkspaceHelper implements IPlugInWorkspaceHelper, IPlugInMetadataPath {

    /**
     * Opens a dataset helper for the dataset identified by the parameter.
     */
    public IPlugInDatasetHelper openDataset(String datasetName)
            throws IOException, AutomationException {
        // Append the path and the dataset name to get the dataset's full path,
        // then make sure the dataset exists.
        String fileName = this.workspacePath + File.separator + datasetName
                + SimplePointWorkspaceFactoryHelper.fileExtension;
        File file = new File(fileName);
        if (!file.exists())
            return null;
        // Create and return a new dataset helper.
        return new SimplePointDatasetHelper(workspacePath, datasetName);
    }

    // Implement the other methods.
}
```
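openDataset turns a dataset name into a file path by joining the workspace path, a separator, the name, and the file extension. A workspace helper that lists its datasets for browsing needs the reverse mapping. The following plain-Java sketch shows both directions. DatasetNameSketch and the lowercase extension parameter are hypothetical, and a real workspace helper would expose its dataset list through IPlugInWorkspaceHelper rather than a java.util.List.

```java
import java.io.File;
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.Locale;

/** Hypothetical helper: maps data files in a workspace folder to dataset names and back. */
final class DatasetNameSketch {

    /** Returns the sorted dataset names (file names without the extension) in the folder. */
    static List<String> datasetNames(File workspaceDir, String extension) {
        List<String> names = new ArrayList<>();
        File[] files = workspaceDir.listFiles();
        if (files == null) {
            return names; // not a readable directory
        }
        for (File f : files) {
            String name = f.getName();
            if (f.isFile() && name.toLowerCase(Locale.ROOT).endsWith(extension)) {
                // Strip the extension to get the dataset name.
                names.add(name.substring(0, name.length() - extension.length()));
            }
        }
        Collections.sort(names);
        return names;
    }

    /** Builds the same path that openDataset checks: workspace + separator + name + extension. */
    static String datasetPath(String workspacePath, String datasetName, String extension) {
        return workspacePath + File.separator + datasetName + extension;
    }
}
```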

If the canSupportSQL property of IPlugInWorkspaceFactoryHelper returns true, the plug-in workspace helper should implement the ISQLSyntax interface. In this case, the workspace object delegates calls to its ISQLSyntax to the interface on this class. The ArcGIS framework passes WHERE clauses to the IPlugInDatasetHelper.fetchAll and fetchByEnvelope methods, and the cursors returned by these functions should contain only rows that match the WHERE clause.

If canSupportSQL returns false, the ArcGIS framework does not pass WHERE clauses but handles the postquery filtering. The advantage of implementing support for WHERE clauses is that queries can be processed on large datasets more efficiently than with a postquery filter. The disadvantage is the extra implementation code required. The example returns false for canSupportSQL and leaves handling of WHERE clauses to the ArcGIS framework.

A plug-in workspace helper can implement IPlugInMetadata or IPlugInMetadataPath to support metadata. Implement IPlugInMetadata if the data source has its own metadata engine; this interface allows metadata to be set and retrieved as property sets. Otherwise, implement IPlugInMetadataPath; it allows the plug-in to specify a metadata file for each dataset. ArcGIS uses these files for storing metadata. Implement one of these interfaces for successful operation of the Export Data command in ArcMap. This command uses the FeatureDataConverter object, which relies on metadata capabilities of data sources.

A plug-in workspace helper can also implement the optional interfaces IPlugInWorkspaceHelper2 and IPlugInLicense.

Implementing a plug-in dataset helper

A plug-in dataset helper class must implement the IPlugInDatasetInfo and IPlugInDatasetHelper interfaces, and the Java class must be annotated with @ArcGISExtension. It does not need to be publicly creatable, as a plug-in workspace helper is responsible for creating it. See the following illustration:

IPlugInDatasetInfo provides information about the dataset so that the user interface can represent it. For example, ArcCatalog uses this interface to display an icon for the dataset. To enable fast browsing, it is important that the class have a low creation overhead. In the SimplePoint example, the SimplePointDatasetHelper class can be created, and all the information for IPlugInDatasetInfo derived, without opening the data file.

```java
// Note the annotation.
@ArcGISExtension
// Note the interfaces implemented by the plug-in dataset helper.
public class SimplePointDatasetHelper implements IPlugInDatasetHelper, IPlugInDatasetInfo {
    // Implement the methods.
}
```

IPlugInDatasetHelper provides more information about the dataset and methods to access the data. If the dataset is a feature dataset (that is, it contains feature classes), all the feature classes are accessed via a single instance of this class. Many of the interface members have a ClassIndex parameter that determines which feature class is being referenced.

IPlugInDatasetHelper.getFields() defines the columns of the dataset. For the SimplePoint data source, all datasets have four fields: Object ID; Shape; and two attribute fields that, in the example, are arbitrarily named ColumnOne and Extra. When implementing getFields, the spatial reference of the dataset must be defined. In the example, for simplicity, an UnknownCoordinateSystem is used. If the spatial reference is a geographic coordinate system, pass the extent of the dataset to IGeographicCoordinateSystem2.setExtentHint() before setting the domain of the spatial reference; setting the domain first can cause problems with projections and export. See the following code example:

```java
/**
 * Returns the fields collection for the class at the specified index.
 */
public IFields getFields(int classIndex) throws IOException, AutomationException {
    // Create a new feature class description and get its required fields
    // (Object ID and Shape).
    IObjectClassDescription objClassDesc = new FeatureClassDescription();
    IFields fields = objClassDesc.getRequiredFields();

    // Add a string column with a length of 1.
    IFieldEdit2 columnOne = new Field();
    columnOne.setLength(1);
    columnOne.setName("ColumnOne");
    columnOne.setType(esriFieldType.esriFieldTypeString);

    // Add an integer column.
    IFieldEdit2 extra = new Field();
    extra.setName("Extra");
    extra.setType(esriFieldType.esriFieldTypeInteger);

    IFieldsEdit fieldsEdit = (IFieldsEdit) fields;
    fieldsEdit.addField(columnOne);
    fieldsEdit.addField(extra);

    // Set the geometry definition of the shape field (tables have no shape field).
    if (this.getDatasetType() != esriDatasetType.esriDTTable) {
        IField shapeField = fields.getField(fields.findField("Shape"));
        IGeometryDefEdit geomDefEdit = (IGeometryDefEdit) shapeField.getGeometryDef();
        geomDefEdit.setGeometryType(geometryTypeByID(classIndex));
        geomDefEdit.setSpatialReferenceByRef(this.getSpatialReference());
    }
    return fields;
}
```

There are three similar members of IPlugInDatasetHelper that all open a cursor on the dataset: fetchAll, fetchByEnvelope, and fetchByID. In the example, all these methods create a new plug-in cursor helper and initialize it with various parameters that control the operation of the cursor. The following code example shows the implementation of fetchByEnvelope:

```java
/**
 * Gets all of the records in the specified envelope, or all records if the envelope is null.
 */
public IPlugInCursorHelper fetchByEnvelope(int classIndex, IEnvelope env,
        boolean strictSearch, String whereClause, Object fieldMap)
        throws IOException, AutomationException {
    try {
        // Return null if the dataset has no extent. Only project the bounds
        // when a query envelope (and so a target spatial reference) is supplied.
        IEnvelope boundEnv = this.getBounds();
        if (env != null)
            boundEnv.project(env.getSpatialReference());
        if (boundEnv.isEmpty())
            return null;
        if (blnDebug)
            System.out.println("debug dataset strictSearch: " + strictSearch);
        // Get the fields for the specified class and return a cursor helper.
        // An OID of -1 means the cursor does not fetch a single record by ID;
        // the query envelope restricts the rows the cursor returns.
        IFields fields = this.getFields(classIndex);
        return new SimplePointCursorHelper(this.fullPath, fields, -1,
                (int[]) fieldMap, env);
    } catch (Exception ex) {
        ex.printStackTrace();
        return null;
    }
}
```

The parameters required by the SimplePointCursorHelper class (defined in the SimplePoint plug-in data source sample) are passed through its constructor. The most important of these parameters are as follows:

- The field map that controls which attribute values are fetched by the cursor

- The query envelope, which determines which rows are fetched by the cursor

- The file path, which tells the cursor where the data is

The example ignores some of the fetchByEnvelope parameters, as ClassIndex applies only to feature classes within a feature dataset and WhereClause applies only to those data sources supporting ISQLSyntax. Also, strictSearch can be ignored since the example does not use a spatial index to perform its queries and always returns a cursor of features that strictly fall within the envelope.

There are other equally valid ways of implementing fetchByEnvelope, fetchByID, and fetchAll. Depending on the data source, it may be more appropriate to create the cursor helper and then filter the rows to be returned in a postprocessing step.

There is one more member of IPlugInDatasetHelper that is worth mentioning. The getBounds method returns the geographic extent of the dataset. Many data sources have the extent recorded in a header file, in which case implementing getBounds is easy. In the example, however, a cursor on the entire dataset must be opened and a minimum bounding rectangle built up gradually. The implementation makes use of IPlugInCursorHelper. It would be unusual for another developer to consume the plug-in interfaces in this way, since once the data source is implemented, the normal geodatabase interfaces work with it in a read-only manner. The getBounds() method must return either a new envelope or a clone of a cached envelope. If a class caches the envelope and hands out references to the cached object, problems can occur with projections; for example, fetchByEnvelope projects the envelope returned by getBounds in place, which would alter a shared cached envelope.
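The caching rule can be illustrated without ArcObjects. In this sketch, a hypothetical Extent class stands in for IEnvelope, and a list of {x, y} pairs stands in for the data file. getBounds builds the minimum bounding rectangle once, caches it, and always returns a copy, so a caller that modifies its result cannot change the cached extent.

```java
import java.util.List;

/** Hypothetical stand-in for IEnvelope; not an ArcObjects type. Starts out empty. */
final class Extent {
    double xMin = Double.POSITIVE_INFINITY, yMin = Double.POSITIVE_INFINITY;
    double xMax = Double.NEGATIVE_INFINITY, yMax = Double.NEGATIVE_INFINITY;

    boolean isEmpty() {
        return xMin > xMax || yMin > yMax;
    }

    /** Grows the rectangle so that it contains the point (x, y). */
    void expandToInclude(double x, double y) {
        xMin = Math.min(xMin, x);
        yMin = Math.min(yMin, y);
        xMax = Math.max(xMax, x);
        yMax = Math.max(yMax, y);
    }

    Extent copy() {
        Extent e = new Extent();
        e.xMin = xMin; e.yMin = yMin; e.xMax = xMax; e.yMax = yMax;
        return e;
    }
}

/** Hypothetical dataset that caches its bounds but never hands out the cached object. */
final class BoundsSketch {
    private final List<double[]> points; // each element is {x, y}
    private Extent cachedBounds;

    BoundsSketch(List<double[]> points) {
        this.points = points;
    }

    Extent getBounds() {
        if (cachedBounds == null) {
            // Equivalent of opening a cursor on the whole dataset and
            // building the minimum bounding rectangle point by point.
            Extent e = new Extent();
            for (double[] p : points) {
                e.expandToInclude(p[0], p[1]);
            }
            cachedBounds = e;
        }
        return cachedBounds.copy(); // callers may modify their copy safely
    }
}
```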

There is an optional interface, IPlugInDatasetHelper2, that has a fetchWithFilter method. If a plug-in implements this interface, this method is called instead of fetchByEnvelope. fetchWithFilter allows filtering by spatial envelope, WHERE clause, and a set of OIDs (FIDSet).

This method should be implemented like fetchByEnvelope unless the FIDSet parameter is supplied. In that case, the FIDSet contains a list of OIDs to return, and the cursor should return only those rows whose OID is in the FIDSet and that also match the spatial filter and WHERE clause.
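The combined filter can be sketched in plain Java. This is an illustration under assumptions rather than the interface method itself: PointRow is a hypothetical row type, a java.util.Set<Integer> stands in for the FIDSet, a Predicate stands in for the WHERE clause, and the envelope is an {xMin, yMin, xMax, yMax} array. A null argument means that filter is not applied.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Set;
import java.util.function.Predicate;

/** Hypothetical row type: OID, point coordinates, and one numeric attribute. */
final class PointRow {
    final int oid;
    final double x, y;
    final int value;

    PointRow(int oid, double x, double y, int value) {
        this.oid = oid;
        this.x = x;
        this.y = y;
        this.value = value;
    }
}

final class FilterSketch {
    /**
     * Returns the rows that pass every supplied filter: OID in fidSet, point inside
     * the envelope (boundary included), and accepted by the where predicate.
     */
    static List<PointRow> filterRows(List<PointRow> rows, double[] envelope,
            Predicate<PointRow> where, Set<Integer> fidSet) {
        List<PointRow> result = new ArrayList<>();
        for (PointRow r : rows) {
            if (fidSet != null && !fidSet.contains(r.oid)) {
                continue; // OID not requested
            }
            if (envelope != null && (r.x < envelope[0] || r.y < envelope[1]
                    || r.x > envelope[2] || r.y > envelope[3])) {
                continue; // outside the spatial filter
            }
            if (where != null && !where.test(r)) {
                continue; // rejected by the WHERE clause stand-in
            }
            result.add(r);
        }
        return result;
    }
}
```

Checking the FIDSet first is a cheap way to skip rows before any geometry or attribute tests run.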

A plug-in dataset helper should implement IPlugInFileSystemDataset if the data source is file based and multiple files make up a dataset. Single-file and folder-based data sources do not need to implement this interface.

A plug-in dataset helper should implement IPlugInRowCount if the isRowCountIsCalculated method of the workspace helper returns false. Otherwise, this interface should not be implemented. If this interface is implemented, make sure it operates quickly. It should be faster than just opening a cursor on the entire dataset and counting.
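For a line-oriented format, one way to count rows faster than a full cursor is to count lines without parsing coordinates or building geometries. The following sketch assumes that every non-empty line is one record, as in the SimplePoint format; RowCountSketch is a hypothetical name, and whether this is fast enough still depends on the file size.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.Reader;

/** Hypothetical fast row count for a line-per-record text format. */
final class RowCountSketch {
    /** Counts non-empty lines; no fields are parsed and no geometries are created. */
    static int countRows(Reader source) throws IOException {
        int count = 0;
        // try-with-resources closes the reader even if reading fails.
        try (BufferedReader reader = new BufferedReader(source)) {
            String line;
            while ((line = reader.readLine()) != null) {
                if (!line.isEmpty()) {
                    count++;
                }
            }
        }
        return count;
    }
}
```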

A plug-in dataset helper can also implement the optional interfaces IPlugInFileOperations and IPlugInFileOperationsClass; IPlugInIndexInfo and IPlugInIndexManager; and IPlugInLicense.

The IPlugInDatasetHelper2 interface can be implemented to optimize queries if there is a way to filter results from the data source more efficiently than checking the attributes of each row one-by-one. For example, data sources that have indexes should implement this interface to take advantage of indexing.

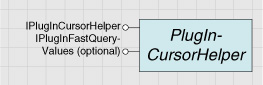

Implementing a plug-in cursor helper

The plug-in cursor helper deals with raw data and is normally the class that contains the most code. The cursor helper represents the results of a query on the dataset. The class must implement the IPlugInCursorHelper interface but does not need to be publicly creatable, because the plug-in dataset helper is responsible for creating it. The Java class must also be annotated with @ArcGISExtension. See the following illustration:

```java
// Notice the annotation.
@ArcGISExtension
// Notice the implemented interface.
public class SimplePointCursorHelper implements IPlugInCursorHelper {
    // Implement the methods.
}
```

The nextRecord method advances the cursor position. In the example, a new line of text is read from the file and stored in a string. As described in the previous section, the dataset helper defines the way the cursor operates; this is reflected in the example's implementation of nextRecord. If a record is being fetched by its Object ID, the cursor is advanced to that record. If a query envelope is specified, the cursor moves on to the next record whose geometry falls within the envelope. See the following code example:

```java
// Declare the following instance variables.

// The extent of the cursor's query.
IEnvelope queryEnv;
// Object ID for a cursor that fetches a single object (-1 if not searching by OID).
long oid = -1;
// The current row from the data file, in string format.
String currentRow;
// Line number of the current row; queryValues returns it as the OID.
long cursorLineNum;
// The reader used to get lines from the data source.
BufferedReader dataFile;
// Point geometry to work with.
IGeometry workPoint;
// Indicates whether the cursor has iterated to completion.
boolean blnFinished = false;
// The source dataset's fields collection.
IFields fields;
// The field map; a value of -1 marks a field that was not requested.
int[] fieldMap;
// The spatial reference of the data and this cursor.
private ISpatialReference dataSpatial;
// This flag is used for debugging purposes.
private boolean blnDebug = true;

/**
 * Fetches the next record from the data source. If the end of the data has
 * previously been reached, the method returns without doing anything. If the
 * end of the data is reached on this call, an AutomationException is thrown;
 * this lets the framework know that the end of the data has been reached.
 */
public void nextRecord() throws IOException, AutomationException {
    if (blnDebug)
        System.out.println("nextRecord is called");
    // The end-of-data error has already been thrown once.
    if (blnFinished)
        return;
    // Loop instead of calling nextRecord recursively, so that skipping many
    // rows outside the query envelope cannot overflow the call stack.
    while (true) {
        // If an OID-specific search was performed and the current row is not null
        // (meaning a row with a matching OID has been found), stop reading the file,
        // flag the cursor as finished, and throw an AutomationException to let the
        // client know that there are no more records.
        if (oid > -1 && currentRow != null) {
            if (blnDebug)
                System.out.println("nextRecord() with OID");
            this.dataFile.close();
            blnFinished = true;
            throw new AutomationException(1, "SPTCursorHelper.java", "Specified row found");
        }
        // Read the next row. The line number in the file is taken to be the OID
        // of the feature; when searching by OID, readFile skips to that line.
        currentRow = readFile(dataFile, (int) oid);
        if (blnDebug)
            System.out.println("SPTCursorHelper.nextRecord() with readline");
        // If no row is returned, the end of the data has been reached. Close the
        // reader so its resources are released, flag the cursor as finished, and
        // throw an AutomationException.
        if (currentRow == null) {
            this.dataFile.close();
            blnFinished = true;
            if (blnDebug)
                System.out.println("Print: End of SimplePoint Plugin cursor");
            throw new AutomationException(1, "SPTCursorHelper.java", "No shape available");
        }
        // Without a query envelope, every row qualifies.
        if (queryEnv == null || queryEnv.isEmpty())
            return;
        // Check that the row's geometry is within the spatial extent of the query.
        // This step is optional (the client can perform it) but improves performance.
        if (blnDebug)
            System.out.println("nextRecord() with Envelope");
        this.queryShape(workPoint); // queryShape is shown in a later code example
        IRelationalOperator relOp = (IRelationalOperator) workPoint;
        if (!relOp.disjoint((IGeometry) queryEnv))
            return; // The geometry is within the query's extent.
        // Otherwise, the geometry is outside the query's extent; read the next row.
    }
}
```

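The implementation of nextRecord must raise an error (in this example, an AutomationException) when there are no more rows to fetch; this is how the framework learns that the end of the data has been reached.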
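The cursor protocol can be sketched in plain Java, independent of ArcObjects. In this hypothetical example, NoMoreRowsException stands in for the AutomationException thrown by the real helper, the query envelope is an {xMin, yMin, xMax, yMax} array, and the 1-based line number is used as the OID (an assumption; the sample's readFile helper is not shown). Rows outside the envelope are skipped in a loop rather than by a recursive call.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.Reader;

/** Hypothetical stand-in for the AutomationException that signals the end of the data. */
class NoMoreRowsException extends Exception {
    NoMoreRowsException(String message) {
        super(message);
    }
}

/** Hypothetical cursor over SimplePoint lines, filtered by a query rectangle. */
final class LineCursorSketch {
    private final BufferedReader reader;
    private final double[] queryEnv; // {xMin, yMin, xMax, yMax}, or null for all rows
    private String currentRow;
    private int lineNumber;          // 1-based line number, used as the OID (assumption)
    private boolean finished;

    LineCursorSketch(Reader source, double[] queryEnv) {
        this.reader = new BufferedReader(source);
        this.queryEnv = queryEnv;
    }

    /** Advances to the next row inside the query rectangle, or throws at the end of the data. */
    void nextRecord() throws IOException, NoMoreRowsException {
        if (finished) {
            return; // the end of the data was already reported, as in the example
        }
        while (true) {
            currentRow = reader.readLine();
            if (currentRow == null) {
                reader.close(); // release the source before reporting the end
                finished = true;
                throw new NoMoreRowsException("No shape available");
            }
            lineNumber++;
            if (queryEnv == null) {
                return; // no spatial filter: every row qualifies
            }
            // Fixed-width coordinates: characters 0-5 hold x, 6-11 hold y.
            double x = Double.parseDouble(currentRow.substring(0, 6));
            double y = Double.parseDouble(currentRow.substring(6, 12));
            if (x >= queryEnv[0] && y >= queryEnv[1] && x <= queryEnv[2] && y <= queryEnv[3]) {
                return; // inside the query rectangle
            }
            // Outside the rectangle: loop to the next line instead of recursing.
        }
    }

    String currentRow() {
        return currentRow;
    }

    int currentOid() {
        return lineNumber;
    }
}
```

A client calls nextRecord repeatedly, reading the current row after each call, and stops when the exception is thrown.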

In this cursor helper, queryShape only needs to set the coordinates of the point. See the following code example:

```java
/**
 * Gets the shape of the current record.
 */
public void queryShape(IGeometry geometry) throws IOException, AutomationException {
    if (blnDebug)
        System.out.println("SPTCursorHelper.queryShape() " + blnFinished);
    if (geometry == null)
        return;
    try {
        // Wrap the geometry supplied by the caller so that putCoords updates it in place.
        Point point = new Point(geometry);
        // Parse the x- and y-values out of the current row and into the geometry.
        double x = Double.parseDouble(currentRow.substring(0, 6));
        double y = Double.parseDouble(currentRow.substring(6, 12));
        point.putCoords(x, y);
        // Note: there is no need to handle the strictSearch test for a cursor
        // created with fetchByEnvelope. nextRecord has already tested that the
        // feature is within the envelope, so the test cannot fail here.
    } catch (Exception ex) {
        geometry.setEmpty();
    }
}
```

For data sources with complex geometries, the performance of queryShape can be improved by using a shape buffer. Use IESRIShape.AttachToESRIShape to attach a shape buffer to the geometry, and then reuse this buffer for each geometry.

The ESRI white paper ESRI Shapefile Technical Description provides more information on shape buffers, since shapefiles use the same shape format.

The queryValues() method copies the attributes of the current record into the row buffer. The field map (specified when the cursor was created) dictates which attributes to fetch. This improves performance by reducing the amount of data transferred; for example, when features are being drawn on the map, it is likely that only a small subset of the attribute values, or none at all, will be required. ArcGIS interprets the return value of queryValues as the ObjectID of the feature. See the following code example:

```java
/**
 * Gets the attribute values of the current row. A return value of -1 indicates
 * the attributes could not be retrieved.
 */
public int queryValues(IRowBuffer row) throws IOException, AutomationException {
    if (blnDebug)
        System.out.println("queryValues() finished=" + blnFinished);
    // If a current row does not exist or is empty, indicate failure.
    if (currentRow == null || currentRow.length() <= 0)
        return -1;
    // If any exception occurs while retrieving the attribute values, catch it
    // and return a value of -1.
    try {
        // Iterate through each field in the field map.
        for (int i = 0; i < this.fieldMap.length; i++) {
            // A value of -1 in the field map indicates the field has not been requested.
            if (this.fieldMap[i] == -1)
                continue;
            // There are two attributes in this format: a numeric attribute represented
            // by two characters (positions 12 and 13), and a text attribute with a
            // length of 1 (the last character). Trim the numeric text, because
            // Integer.valueOf does not accept padding spaces.
            String numAtt = currentRow.substring(12, 14).trim();
            String szAtt = currentRow.substring(currentRow.length() - 1);
            // Apply the attribute value that matches the type of the current field.
            int fieldType = this.fields.getField(i).getType();
            switch (fieldType) {
            // Numeric fields receive the numeric attribute.
            case esriFieldType.esriFieldTypeInteger:
            case esriFieldType.esriFieldTypeDouble:
            case esriFieldType.esriFieldTypeSmallInteger:
            case esriFieldType.esriFieldTypeSingle:
                row.setValue(i, Integer.valueOf(numAtt));
                break;
            // String fields receive the text attribute.
            case esriFieldType.esriFieldTypeString:
                row.setValue(i, szAtt);
                break;
            default:
                // Other field types (such as Object ID and Shape) are not set here.
                break;
            }
        }
        // Return the current position of the cursor in the file, used as the OID.
        return (int) cursorLineNum;
    } catch (Exception ex) {
        ex.printStackTrace();
        return -1;
    }
}
```
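The field-map convention can be illustrated in plain Java. In this sketch, an Object[] stands in for the IRowBuffer, fillRequestedValues is a hypothetical name, and the field layout (Object ID, Shape, ColumnOne, and Extra at indexes 0 to 3) is an assumption based on the getFields example. Only fields whose field map entry is not -1 are parsed and filled, and the OID is returned, as in queryValues.

```java
/** Hypothetical sketch of the field-map convention used by queryValues. */
final class FieldMapSketch {
    // Assumed layout: 0 = Object ID, 1 = Shape, 2 = ColumnOne (text), 3 = Extra (integer).
    static final int COLUMN_ONE = 2;
    static final int EXTRA = 3;

    /**
     * Copies the requested attributes of a SimplePoint line into rowBuffer and
     * returns the OID, or -1 if the row cannot be read.
     */
    static int fillRequestedValues(String currentRow, int oid, int[] fieldMap, Object[] rowBuffer) {
        if (currentRow == null || currentRow.length() < 15) {
            return -1;
        }
        try {
            for (int i = 0; i < fieldMap.length; i++) {
                if (fieldMap[i] == -1) {
                    continue; // field not requested: skip the parsing work
                }
                if (i == EXTRA) {
                    rowBuffer[i] = Integer.parseInt(currentRow.substring(12, 14).trim());
                } else if (i == COLUMN_ONE) {
                    rowBuffer[i] = currentRow.substring(currentRow.length() - 1);
                }
            }
        } catch (NumberFormatException ex) {
            return -1; // malformed numeric attribute
        }
        return oid;
    }
}
```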

Deploying PlugIn Data Sources

Compile the Java classes created above and bundle the resulting .class files into a JAR file. To deploy the JAR file to ArcGIS, place it in the <ArcGIS Install Dir>\java\lib\ext folder. ArcGIS applications (ArcGIS Engine, ArcMap, ArcCatalog, and ArcGIS Server) recognize the plug-in data source at startup, based on the annotations on the classes. If an ArcGIS application is running when you deploy the JAR file, restart it. If you modify the Java classes, re-create and redeploy the JAR file, and then restart the ArcGIS application.

| Development licensing | Deployment licensing |
|---|---|
| ArcGIS for Desktop Basic | ArcGIS for Desktop Basic |
| ArcGIS for Desktop Standard | ArcGIS for Desktop Standard |
| ArcGIS for Desktop Advanced | ArcGIS for Desktop Advanced |
| Engine Developer Kit | Engine |
| Server | Server |