Summary

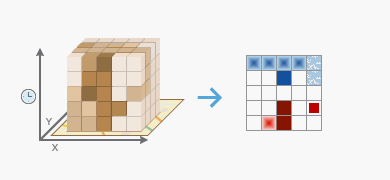

Identifies trends in the clustering of point densities (counts) or summary fields in a space time cube created using Create Space Time Cube. Categories include new, consecutive, intensifying, persistent, diminishing, sporadic, oscillating and historical hot and cold spots.

Learn more about how the Emerging Hot Spot Analysis tool works

Illustration

Usage

This tool can only accept netCDF files created by the Create Space Time Cube tool.

Each bin in the space-time cube has a LOCATION_ID, a time_step_ID, a COUNT value, and possibly values for one or more Summary Fields. Bins associated with the same physical location share the same location ID and together represent a time series. Bins associated with the same time-step interval share the same time-step ID and together comprise a time slice. The count value for each bin reflects the number of points that occurred at the associated location within the associated time-step interval.
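The relationship between bins, time series, and time slices can be sketched in a few lines of Python. This is only an illustration: the tuple layout and the `group_bins` helper are hypothetical, and a real cube stores these values in netCDF variables rather than in a Python list.

```python
from collections import defaultdict

# Hypothetical bins as (location_id, time_step_id, count) tuples.
bins = [
    (0, 0, 2), (0, 1, 0), (0, 2, 5),
    (1, 0, 1), (1, 1, 3), (1, 2, 4),
]


def group_bins(bins):
    """Group bin counts into time series (per location) and time slices (per time step)."""
    time_series = defaultdict(dict)   # location_id -> {time_step_id: count}
    time_slices = defaultdict(dict)   # time_step_id -> {location_id: count}
    for location_id, time_step_id, count in bins:
        time_series[location_id][time_step_id] = count
        time_slices[time_step_id][location_id] = count
    return dict(time_series), dict(time_slices)


time_series, time_slices = group_bins(bins)
print(time_series[0])   # counts at location 0 across all time steps
print(time_slices[2])   # counts at every location for time step 2
```

Bins that share a location ID end up in the same time series, and bins that share a time-step ID end up in the same time slice.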

This tool analyzes a variable in the netCDF Input Space Time Cube using a space-time implementation of the Getis-Ord Gi* statistic.

The Output Features will be added to the Table Of Contents with rendering that summarizes results of the space-time analysis for all locations analyzed. If you specify a Polygon Analysis Mask, the locations analyzed will be those that fall within the analysis mask; otherwise, the locations analyzed will be those with at least one point for at least one time-step interval.

In addition to the Output Features, an analysis summary is written to the Results window. Right-clicking the Messages entry in the Results window and selecting View will display the analysis summary in a Message dialog box. If you execute this tool in the foreground, the analysis summary will also be displayed in the progress dialog box.

The Emerging Hot Spot Analysis tool can detect eight specific hot or cold spot trends: new, consecutive, intensifying, persistent, diminishing, sporadic, oscillating, and historical. See Learn more about how the Emerging Hot Spot Analysis tool works for output category definitions and additional information about the algorithms this tool employs.

To get a measure of the intensity of feature clustering, this tool uses a space-time implementation of the Getis-Ord Gi* statistic, which considers the value for each bin within the context of the values for neighboring bins. A bin is considered a neighbor if its centroid falls within the Neighborhood Distance and its time interval is within the Neighborhood Time Step you specify. When you do not provide a Neighborhood Distance value, one is calculated for you based on the spatial distribution of your point data. When you do not provide a Neighborhood Time Step value, the tool uses the default value, which is 1 time-step interval.
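As a rough illustration of what the Getis-Ord Gi* statistic measures, the following sketch computes the standard Gi* z-score for one bin using binary weights (1 for bins in the neighborhood, 0 otherwise). The function name and the flat list of values are assumptions made for the example; the tool's own space-time implementation may differ in its details.

```python
import math


def getis_ord_gi_star(values, neighbor_indices):
    """Return the Gi* z-score for one bin using binary (0/1) weights.

    values: values for all n bins being analyzed.
    neighbor_indices: indices of the bins in the target bin's neighborhood,
    including the target bin itself.
    """
    n = len(values)
    mean = sum(values) / n
    # Population standard deviation of all bin values
    s = math.sqrt(sum(v * v for v in values) / n - mean * mean)
    w_sum = len(neighbor_indices)      # sum of the binary weights
    w_sq_sum = len(neighbor_indices)   # sum of the squared binary weights
    local_sum = sum(values[j] for j in neighbor_indices)
    numerator = local_sum - mean * w_sum
    denominator = s * math.sqrt((n * w_sq_sum - w_sum ** 2) / (n - 1))
    return numerator / denominator


counts = [1, 1, 1, 1, 10, 12, 11, 1, 1, 1]
hot_z = getis_ord_gi_star(counts, [4, 5, 6])    # neighborhood of high values
cold_z = getis_ord_gi_star(counts, [0, 1, 2])   # neighborhood of low values
print(hot_z, cold_z)
```

A large positive z-score indicates clustering of high values (a hot spot), and a negative z-score indicates clustering of low values. The sketch does not guard against the degenerate cases where every value is identical or the neighborhood contains every bin, both of which make the denominator zero.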

To determine which bins will be included in each analysis neighborhood, the tool first finds neighboring bins that fall within the specified Neighborhood Distance. Next, for each of those bins, it includes bins at those same locations from N previous time steps, where N is the Neighborhood Time Step value you specify.

The Neighborhood Time Step value is the number of time-step intervals to include in the analysis neighborhood. If the time-step interval for your cube is three months, for example, and you specify 2 for the Neighborhood Time Step, the analysis neighborhood will include all bin counts within the Neighborhood Distance together with the bins at those same locations for the previous two time-step intervals. The neighborhood therefore covers three time-step intervals, or a nine-month time period.
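The two-step neighborhood search described above can be sketched as follows. This is a minimal illustration, assuming planar centroid coordinates in the same units as the distance and zero-based time-step IDs; the `analysis_neighborhood` function and its arguments are hypothetical and are not part of the tool.

```python
import math


def analysis_neighborhood(centroids, location_id, time_step, distance, n_time_steps):
    """Return the (location_id, time_step) pairs in one bin's analysis neighborhood.

    centroids: dict mapping location_id -> (x, y) centroid coordinates.
    """
    x0, y0 = centroids[location_id]
    # Step 1: locations whose centroid falls within the neighborhood distance
    nearby = [
        loc for loc, (x, y) in centroids.items()
        if math.hypot(x - x0, y - y0) <= distance
    ]
    # Step 2: for each nearby location, include the current time step and the
    # N previous time steps (never earlier than the first step in the cube)
    first_step = max(0, time_step - n_time_steps)
    return [(loc, t) for loc in nearby for t in range(first_step, time_step + 1)]


centroids = {0: (0.0, 0.0), 1: (3.0, 0.0), 2: (10.0, 0.0)}
neighborhood = analysis_neighborhood(centroids, 0, 5, 5.0, 2)
print(neighborhood)
```

With a Neighborhood Time Step of 2, each nearby location contributes three bins: the current time step plus the two previous ones.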

The Polygon Analysis Mask feature layer may include one or more polygons defining the analysis study area. These polygons indicate where point features could possibly occur and should exclude areas where points would be impossible. If you were analyzing residential burglary trends, for example, you might use the Polygon Analysis Mask to exclude a large lake, regional parks, or other areas where there aren't any homes.

The Polygon Analysis Mask is intersected with the extent of the Input Space Time Cube and will not extend the dimensions of the cube.

Running Emerging Hot Spot Analysis adds some analysis results back to the netCDF Input Space Time Cube. Three analyses are performed:

- Each bin is analyzed within the context of neighboring bins to measure how intense clustering is for both high and low values. The result from this analysis is a z-score, p-value, and binning category for every bin in the space-time cube.

- The time series of these z-scores at locations analyzed is then assessed using the Mann-Kendall statistic. The result from this analysis is a clustering trend z-score, p-value, and binning category for each location.

- Finally, the time series of the values at locations analyzed is assessed using the Mann-Kendall statistic. The result from this analysis is a trend z-score, p-value, and binning category for each location.
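The Mann-Kendall trend test used in the second and third analyses can be sketched as shown below. This is the textbook form of the statistic without a correction for tied values; how the tool handles ties is not stated here, so treat the function as an illustration rather than a reproduction of the tool's output.

```python
import math


def mann_kendall(series):
    """Return (z_score, p_value) for the Mann-Kendall trend test, ignoring ties."""
    n = len(series)
    # S sums the sign of every later-minus-earlier difference in the series
    s = 0
    for i in range(n - 1):
        for j in range(i + 1, n):
            diff = series[j] - series[i]
            s += (diff > 0) - (diff < 0)
    if s == 0:
        return 0.0, 1.0
    var_s = n * (n - 1) * (2 * n + 5) / 18.0
    # Continuity correction: shift S one unit toward zero
    z = (s - 1) / math.sqrt(var_s) if s > 0 else (s + 1) / math.sqrt(var_s)
    # Two-sided p-value from the standard normal distribution
    p = math.erfc(abs(z) / math.sqrt(2))
    return z, p


rising_z, rising_p = mann_kendall([1, 2, 3, 4, 5, 6, 7, 8, 9, 10])
falling_z, falling_p = mann_kendall([10, 9, 8, 7, 6, 5, 4, 3, 2, 1])
print(rising_z, rising_p)
print(falling_z, falling_p)
```

A positive z-score indicates an upward trend and a negative z-score a downward trend. Passing the time series of Gi* z-scores at a location corresponds to the clustering trend analysis, and passing the time series of raw values corresponds to the value trend analysis.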

A summary of the variables added to the Input Space Time Cube is given below:

| Variable Name | Description | Dimension |
| --- | --- | --- |
| EMERGING_{ANALYSIS_VARIABLE}_HS_PVALUE | Getis-Ord Gi* statistic p-value measuring the statistical significance of high value (hot spot) and low value (cold spot) clustering. | Three dimensions: one p-value for every bin in the space time cube. |
| EMERGING_{ANALYSIS_VARIABLE}_HS_ZSCORE | Getis-Ord Gi* statistic z-score measuring the intensity of high value (hot spot) and low value (cold spot) clustering. | Three dimensions: one z-score for every bin in the space time cube. |
| EMERGING_{ANALYSIS_VARIABLE}_HS_BIN | The result category used to classify each bin as a statistically significant hot or cold spot value. The bin is based on an FDR correction. -3: cold spot, 99 percent confidence; -2: cold spot, 95 percent confidence; -1: cold spot, 90 percent confidence; 0: not a statistically significant hot or cold spot; 1: hot spot, 90 percent confidence; 2: hot spot, 95 percent confidence; 3: hot spot, 99 percent confidence | Three dimensions: one binning category for every bin in the space time cube. |
| {ANALYSIS_VARIABLE}_TREND_PVALUE | The Mann-Kendall p-value measuring statistical significance of the trend in values at a location. | Two dimensions: one p-value for each location analyzed. |
| {ANALYSIS_VARIABLE}_TREND_ZSCORE | The z-score measuring the Mann-Kendall trend, up or down, associated with the values at a location. A positive z-score indicates an upward trend; a negative z-score indicates a downward trend. | Two dimensions: one z-score for each location analyzed. |
| {ANALYSIS_VARIABLE}_TREND_BIN | The result category used to classify each location as having a statistically significant upward or downward trend for the values. -3: down trend, 99 percent confidence; -2: down trend, 95 percent confidence; -1: down trend, 90 percent confidence; 0: no significant trend; 1: up trend, 90 percent confidence; 2: up trend, 95 percent confidence; 3: up trend, 99 percent confidence | Two dimensions: one binning category for each location analyzed. |
| EMERGING_{ANALYSIS_VARIABLE}_TREND_PVALUE | The Mann-Kendall p-value measuring statistical significance of the trend in hot/cold spot z-scores at a location. | Two dimensions: one p-value for each location analyzed. |
| EMERGING_{ANALYSIS_VARIABLE}_TREND_ZSCORE | The z-score measuring the Mann-Kendall trend, up or down, associated with the trend in hot/cold spot z-scores at a location. A positive z-score indicates an upward trend; a negative z-score indicates a downward trend. | Two dimensions: one z-score for each location analyzed. |
| EMERGING_{ANALYSIS_VARIABLE}_TREND_BIN | The result category used to classify each location as having a statistically significant upward or downward trend for hot/cold spot z-scores. -3: down trend, 99 percent confidence; -2: down trend, 95 percent confidence; -1: down trend, 90 percent confidence; 0: no significant trend; 1: up trend, 90 percent confidence; 2: up trend, 95 percent confidence; 3: up trend, 99 percent confidence | Two dimensions: one binning category for each location analyzed. |
| EMERGING_{ANALYSIS_VARIABLE}_CATEGORY | One of 16 categories, 1 to 8, 0, and -1 to -8. 1, new hot spot; 2, consecutive hot spot; 3, intensifying hot spot; 4, persistent hot spot; 5, diminishing hot spot; 6, sporadic hot spot; 7, historical hot spot; 0, no trend detected; -1, new cold spot; -2, consecutive cold spot; -3, intensifying cold spot; -4, persistent cold spot; -5, diminishing cold spot; -6, sporadic cold spot; -7, historical cold spot | Two dimensions: one category for each location analyzed. |
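When reading results back from the cube, it can help to build the variable names from the analysis variable and to decode the hot spot bin values. The sketch below assumes the analysis variable name is substituted into the patterns listed above exactly as given (for example, COUNT); both helper functions are hypothetical and are not part of arcpy.

```python
def emerging_variable_names(analysis_variable):
    """Return the names of the variables added to the cube for one analysis variable."""
    v = analysis_variable
    return [
        "EMERGING_{0}_HS_PVALUE".format(v),
        "EMERGING_{0}_HS_ZSCORE".format(v),
        "EMERGING_{0}_HS_BIN".format(v),
        "{0}_TREND_PVALUE".format(v),
        "{0}_TREND_ZSCORE".format(v),
        "{0}_TREND_BIN".format(v),
        "EMERGING_{0}_TREND_PVALUE".format(v),
        "EMERGING_{0}_TREND_ZSCORE".format(v),
        "EMERGING_{0}_TREND_BIN".format(v),
        "EMERGING_{0}_CATEGORY".format(v),
    ]


def describe_hs_bin(code):
    """Translate an HS_BIN value (-3 to 3) into its documented meaning."""
    confidence = {1: 90, 2: 95, 3: 99}
    if code == 0:
        return "not a statistically significant hot or cold spot"
    kind = "hot spot" if code > 0 else "cold spot"
    return "{0}, {1} percent confidence".format(kind, confidence[abs(code)])


names = emerging_variable_names("COUNT")
print(names[0])
print(describe_hs_bin(-3))
```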

Syntax

EmergingHotSpotAnalysis_stpm (in_cube, analysis_variable, output_features, {neighborhood_distance}, {neighborhood_time_step}, {polygon_mask})

| Parameter | Explanation | Data Type |
| --- | --- | --- |
| in_cube | The netCDF cube to be analyzed. This file must have a .nc extension and must have been created using the Create Space Time Cube tool. | File |
| analysis_variable | The numeric variable in the netCDF file you want to analyze. | String |
| output_features | The output feature class results. This feature class will be a two-dimensional map representation of the hot and cold spot trends in your data. It will show, for example, any new or intensifying hot spots. | Feature Class |
| neighborhood_distance (Optional) | The spatial extent of the analysis neighborhood. This value determines which features are analyzed together in order to assess local space-time clustering. | Linear Unit |
| neighborhood_time_step (Optional) | The number of time-step intervals to include in the analysis neighborhood. This value determines which features are analyzed together in order to assess local space-time clustering. | Long |
| polygon_mask (Optional) | A polygon feature layer with one or more polygons defining the analysis study area. You would use a polygon analysis mask to exclude a large lake from the analysis, for example. Bins defined in the Input Space Time Cube that fall outside of the mask will not be included in the analysis. | Feature Layer |

Code Sample

EmergingHotSpotAnalysis example 1 (Python window)

The following Python window script demonstrates how to use the EmergingHotSpotAnalysis tool.

arcpy.env.workspace = r"C:\STPM"

arcpy.EmergingHotSpotAnalysis_stpm("Homicides.nc", "COUNT", "EHS_Homicides.shp", "5 Miles", 2, "#")

EmergingHotSpotAnalysis example 2 (stand-alone Python script)

The following stand-alone Python script demonstrates how to use the EmergingHotSpotAnalysis tool.

# Create Space Time Cube of homicide incidents in a metropolitan area

# Import system modules
import arcpy

# Set property to overwrite existing output, by default
arcpy.env.overwriteOutput = True

# Local variables...
workspace = r"C:\STPM"

try:
    # Set the current workspace (to avoid having to specify the full path to the feature
    # classes each time)
    arcpy.env.workspace = workspace

    # Create Space Time Cube of homicide incident data with 3 months and 3 miles settings
    # Process: Create Space Time Cube
    cube = arcpy.CreateSpaceTimeCube_stpm("Homicides.shp", "Homicides.nc", "MyDate", "#",
                                          "3 Months", "End time", "#", "3 Miles")

    # Create a polygon that defines where incidents are possible
    # Process: Minimum Bounding Geometry of homicide incident data
    arcpy.MinimumBoundingGeometry_management("Homicides.shp", "bounding.shp", "CONVEX_HULL",
                                             "ALL", "#", "NO_MBG_FIELDS")

    # Emerging Hot Spot Analysis of homicide incident cube using 5 Miles neighborhood
    # distance and 2 neighborhood time step to detect hot spots
    # Process: Emerging Hot Spot Analysis
    cube = arcpy.EmergingHotSpotAnalysis_stpm("Homicides.nc", "COUNT", "EHS_Homicides.shp",
                                              "5 Miles", 2, "bounding.shp")

except arcpy.ExecuteError:
    # If any error occurred when running the tool, print the messages
    print(arcpy.GetMessages())

Environments

Licensing Information

- ArcGIS for Desktop Basic: Yes

- ArcGIS for Desktop Standard: Yes

- ArcGIS for Desktop Advanced: Yes